Learning the Docker Basics 🐳

by Nicklas Envall

This article is not intended to be a "copy-paste into your terminal" tutorial. I couldn't find a straight-to-the-point article that incrementally introduced each Docker concept, so I wrote my own. Each section describes one concept. The sections are meant to be read in order, but you can read one on its own if you already understand the ones before it. At the end, the article brings everything together with a practical exercise. The table of contents is:

- Overview of Docker

- What's a Docker container?

- What's a Docker image?

- What's a Docker volume?

- The Docker Compose Tool

- Why do we use Docker Swarm?

- Using Docker in a Project

This is also not a guide on how to use every Docker command; use the docs for that. I'll only introduce commands where necessary. We will not cover how to install Docker at all, since there are already well-written guides on how to do that here.

1. Overview of Docker

Docker helps us create, manage, orchestrate, and deploy containers. It does so through the Docker Engine, which consists of a Docker client and a Docker server (the daemon). Both matter because Docker uses a client-server architecture. How does that work? We have a Docker host, which is a computer or virtual machine, typically running Linux. The Docker host runs the daemon, and the daemon manages objects like containers, images, and volumes. We never edit our Docker objects directly. Instead, we use a Docker client to send commands to the daemon via an API.
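To make the client-server idea concrete, here is a minimal Node.js sketch that plays the role of a Docker client by talking to the daemon's HTTP API directly. It assumes a Linux host where the daemon listens on the default Unix socket /var/run/docker.sock and that your user is allowed to access it. The names buildRequest and listContainers are my own and not part of Docker.

```javascript
const http = require('http');

// Assumption: default socket location of the Docker daemon on a Linux host.
const DOCKER_SOCKET = '/var/run/docker.sock';

// Builds the options for an HTTP GET request sent over the daemon's Unix socket.
function buildRequest(path) {
  return { socketPath: DOCKER_SOCKET, path, method: 'GET' };
}

// Asks the daemon for all containers (running and stopped) and resolves
// with the parsed JSON response.
function listContainers() {
  return new Promise((resolve, reject) => {
    const req = http.request(buildRequest('/containers/json?all=true'), (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => {
        try {
          resolve(JSON.parse(body));
        } catch (err) {
          reject(err);
        }
      });
    });
    req.on('error', reject);
    req.end();
  });
}

// Usage (requires a running Docker daemon):
// listContainers()
//   .then((containers) => containers.forEach((c) => console.log(c.Names, c.State)))
//   .catch((err) => console.error('Could not reach the daemon:', err.message));
```

This is roughly what the docker CLI does for us when we type a command: it turns the command into an API request and shows the daemon's answer.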

Docker has a "write once, run anywhere" mentality. Docker gives you a lot of tools that make it easier to achieve that. Docker also has a motto, “batteries included but removable”, which means that it should give you tools, but you should be able to pick and replace things as well. In the next sections, we’ll look at Docker objects such as containers, images, and volumes.

2. What’s a Docker Container?

A container is an isolated instance on a host where we can run our application. Containers are based on Docker images that contain all the dependencies and configurations for an app. When we start a container, we are actually putting a small writable filesystem on top of an image. We will look at how images work in the next section. For now, it's sufficient to understand that a container is an isolated instance.

If you know what a Virtual Machine (VM) is, then you might think that a container seems like a VM. That's a natural thought because they are conceptually related, but they are very different. The complete difference is beyond the scope of this article. To put things into perspective, though: ten VMs each run their own guest OS, while ten containers share the single OS kernel of their host. This makes containers more lightweight, quicker to start, and cheaper because we don't have to buy a license for each OS.

Docker containers are not only lightweight and easy to use, but they also solve the "it works on my machine" problem, where the app works on your machine but not on your coworkers' machines. That problem is solved because the image a container is created from packages the app together with its dependencies and configuration, so everyone runs the same environment regardless of what is installed on their own machine.

Running a Docker container

Now we'll familiarize ourselves with running containers manually. To start running a new container, we use the docker run [OPTIONS] IMAGE [COMMAND] [ARG...] command. Open your terminal and type the following:

$ docker run -i -t ubuntu /bin/bash

You have now told Docker to create a new container that uses the ubuntu image. You also told Docker to run the /bin/bash command, which opens the bash shell. Docker first checks whether you already have the ubuntu image available locally; if not, it downloads it. Then, based on that image, Docker creates a container. Your terminal should look something like this after you run a couple of commands inside the container:

root@a42bcced7654:/#
root@a42bcced7654:/# ls
bin boot dev … … ...
root@a42bcced7654:/# echo 'hello'
hello

Run exit to leave the container. The container itself is not deleted even though you just left it; it has only stopped. We can use the docker ps command to see all the running containers. Adding -a shows all containers, including stopped ones. Now run docker ps -a and you should get a list of all containers.

You should see that Docker automatically gave our container a random name and an id. Note that we could have chosen the name ourselves with the --name option. We can use the container's name or id to tell Docker to do things with that particular container. For example, let's step back into our container:

C:\Users\Nicklas>docker start [container-name]
{ should show the container name }
C:\Users\Nicklas>docker ps
{ should show the container here now as active }
C:\Users\Nicklas>docker attach [container-name]

We just started the container in the background and then entered it with the attach command. Containers can also be run in the background from the get-go with -d, which stands for detach. Finally, to remove a container, you must first stop it with the docker stop command and then remove it with the docker rm command.
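If you ever want to drive these commands from a script, it helps to see how the options fit together. The sketch below builds the argument list for docker run from an options object. The helper dockerRunArgs is hypothetical (my own naming), and it only covers the flags mentioned in this section.

```javascript
// Hypothetical helper: turns an options object into the arguments for `docker run`.
function dockerRunArgs({ image, name, detach = false, interactive = false, command = [] }) {
  const args = ['run'];
  if (detach) args.push('-d');             // run in the background
  if (interactive) args.push('-i', '-t');  // keep STDIN open and allocate a terminal
  if (name) args.push('--name', name);     // pick the name instead of a random one
  args.push(image, ...command);            // image first, then the command to run
  return args;
}

const args = dockerRunArgs({ image: 'ubuntu', interactive: true, command: ['/bin/bash'] });
console.log(['docker', ...args].join(' '));
// docker run -i -t ubuntu /bin/bash
```

The resulting array could be handed to Node's child_process.execFileSync('docker', args) on a machine where Docker is installed.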

3. What’s a Docker image?

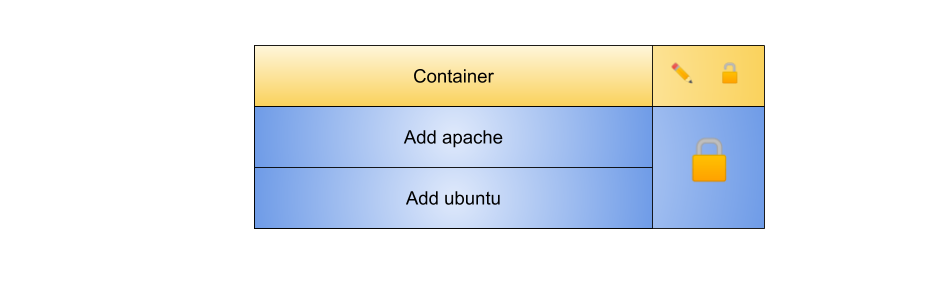

Images are templates from which containers are created. This means that an image contains instructions on how to create a container. But images are more than mere instructions. An image is a stack of immutable layers. Each layer corresponds to a set of filesystem changes derived from an instruction. When a container is created, a read-write layer is put on top of the read-only layers. The image below should clarify how it works:

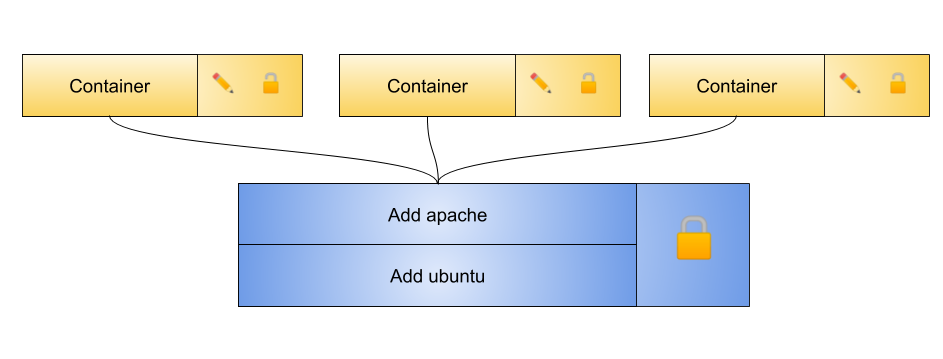

So our first layer is where we added Ubuntu, and the second layer is where we added Apache. It might seem like a disadvantage that the layers are read-only, but since the layers are immutable, we can share them among many different containers.

This is resource-efficient and makes it quicker to create and start containers. Curious how the containers can access the layers? A hint is that Docker uses something called "union mounting" to let all the filesystems appear as one.
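To get a feeling for union mounting, here is a toy model in JavaScript. It is only a sketch of the idea and not how Docker's storage drivers are implemented: each layer is a plain object that maps file paths to contents, and unionView (a name I made up) merges them from bottom to top so that upper layers win.

```javascript
// Merges layers bottom-up into one view. A null value marks a file
// that was deleted in that layer.
function unionView(layers) {
  const view = {};
  for (const layer of layers) {
    for (const [path, content] of Object.entries(layer)) {
      if (content === null) delete view[path];
      else view[path] = content;
    }
  }
  return view;
}

// Read-only image layers, frozen to mimic immutability.
const ubuntuLayer = Object.freeze({ '/bin/bash': 'bash binary' });
const apacheLayer = Object.freeze({ '/usr/sbin/apache2': 'apache binary' });
const imageLayers = [ubuntuLayer, apacheLayer];

// Two containers share the same image layers but each gets its own writable layer.
const writableA = { '/var/www/index.html': 'hello from A' };
const writableB = {};

const viewA = unionView([...imageLayers, writableA]);
const viewB = unionView([...imageLayers, writableB]);

console.log(Object.keys(viewA)); // [ '/bin/bash', '/usr/sbin/apache2', '/var/www/index.html' ]
console.log(Object.keys(viewB)); // [ '/bin/bash', '/usr/sbin/apache2' ]
```

Container A sees its own file on top of the shared layers, while container B is unaffected, even though neither container has its own copy of Ubuntu or Apache.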

How to build an image with a Dockerfile

Now, since images are just a set of layers, we can take the current state of a container and create an image out of it. But doing this manually each time we need an image would be inefficient, error-prone, and hard to scale. So we can instead create a file called Dockerfile. The Dockerfile contains instructions that build an image. Let's look at the format of a Dockerfile:

# Comment
INSTRUCTION arguments

There are many different instructions available. Here are some common ones, concisely explained:

- FROM <image>: sets the base image. A Dockerfile must begin with a FROM instruction (only ARG may come before it), and FROM can appear again later to start a new build stage.

- WORKDIR </path/to/workdir>: creates (if non-existent) and sets the working directory. The directory becomes the working directory for the instructions that follow.

- COPY <src> <dest>: copies files or folders from <src>, which is relative to the build context, into the container's filesystem at <dest>.

- RUN <command>: executes the provided command and creates a new layer from the result.

- EXPOSE <port>: lets Docker know which network port the container listens on at runtime. It documents the port but does not publish it to the host.

- CMD ["executable","param1","param2"]: sets the command that is executed when the container starts. Only one CMD takes effect per Dockerfile; if there are several, the last one wins.
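The "INSTRUCTION arguments" format is simple enough that we can take it apart with a few lines of JavaScript. The sketch below is my own simplified parser (parseDockerfile is a made-up name). It assumes one instruction per line and ignores line continuations and other details that the real Dockerfile parser handles.

```javascript
// Splits Dockerfile text into { instruction, args } pairs,
// skipping blank lines and comments.
function parseDockerfile(text) {
  return text
    .split('\n')
    .map((line) => line.trim())
    .filter((line) => line !== '' && !line.startsWith('#'))
    .map((line) => {
      const [, instruction, args] = line.match(/^(\S+)\s*(.*)$/);
      // Instructions are case-insensitive, uppercase is the convention.
      return { instruction: instruction.toUpperCase(), args };
    });
}

const example = `
# A small Node.js image
FROM node:10
WORKDIR /app
COPY package*.json ./
RUN npm install
EXPOSE 3001
CMD [ "npm", "start" ]
`;

for (const { instruction, args } of parseDockerfile(example)) {
  console.log(instruction.padEnd(8), args);
}
```

Reading the output from top to bottom gives the order in which Docker processes the instructions when it builds the image.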

We use instructions like the ones above in a Dockerfile, and then we use the docker build command to build our image. It's important to understand that the path you give to docker build becomes the build context: the files in that path are sent to the Docker daemon, and instructions like COPY can only use files from it. The command for building an image looks like this:

docker build [OPTIONS] PATH | URL | -

You'll commonly see docker build . where the dot indicates that the current directory is the build context. In the last section of this article, we'll create our own Dockerfiles, which will make it easier to understand what Dockerfiles are all about. If you're having a hard time understanding how the instructions work, read the Dockerfile reference.

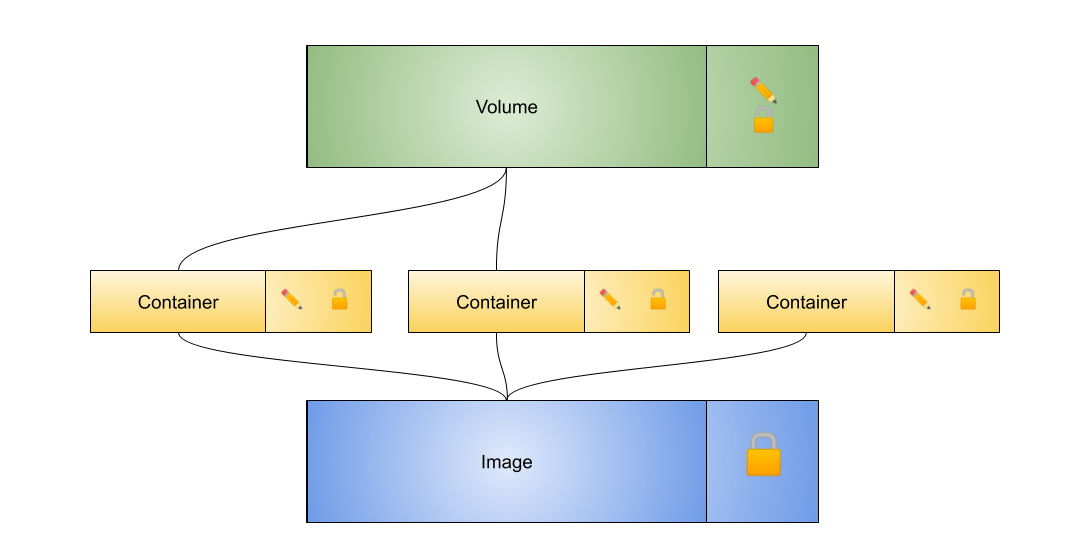

4. What’s a Docker Volume?

Docker volumes allow us to persist data and share it between containers. Containers, as we now know, have their own filesystems. Containers cannot access each other's filesystems, and on top of that, when a container is deleted, its filesystem is removed. So what do we do when we want to persist data or make it accessible to multiple containers? The answer is that we use volumes.

Volumes are files or folders located on the host's filesystem. The contents of a volume are not copied into containers. Instead, the volume is mounted into them, which means that containers can share the same source of data. If a container changes something in a volume, the change is made directly to the volume, so other containers that use it will see the change.

Here are some positive points with volumes:

- The size of the container does not increase since it’s the volume that increases.

- The volume contents may persist even though a container gets destroyed.

Bind Mounts vs Volumes

You'll also encounter something called a bind mount. Do not mistake it for a volume, because it's not one. Bind mounts and volumes are closely related, hence the confusion. Docker gives us two common alternatives for storing persistent files on our host: bind mounts and volumes.

So what separates the two? With bind mounts, files and folders from anywhere on our host's filesystem are mounted into the container. Two downsides of bind mounts are that processes outside of Docker can change the data and that we cannot use Docker CLI commands to manage it. Volumes are also stored on the host's filesystem, but in a folder that Docker controls; let's refer to it as the Docker area. With volumes, we can use Docker CLI commands, and Docker will also create a volume for us automatically if we reference one that doesn't exist yet when starting a container.

So should you use bind mounts or volumes? That’s really up to you, but the Docker documentation clearly says, "volumes are the best way to persist data in Docker".
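In practice, you can often tell the two apart by looking at the source part of a "source:target" mount string: a host path means a bind mount, while a plain name means a volume. The sketch below captures that rule of thumb. classifyMount is a hypothetical helper of mine, and it assumes POSIX-style paths (no Windows drive letters) and that the string always contains a source.

```javascript
// Classifies a "source:target" mount string as a bind mount or a named volume.
function classifyMount(spec) {
  const [source, target] = spec.split(':');
  // A source that looks like a path points at the host's filesystem.
  const looksLikePath = source.startsWith('/') || source.startsWith('.');
  return { type: looksLikePath ? 'bind' : 'volume', source, target };
}

console.log(classifyMount('hello_volume:/src'));
// { type: 'volume', source: 'hello_volume', target: '/src' }
console.log(classifyMount('./frontend:/app'));
// { type: 'bind', source: './frontend', target: '/app' }
```

Keep this in mind when reading Compose files: an entry under the volumes key that starts with ./ is a bind mount, even though the key is called volumes.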

How to use a Docker Volume

Now let’s create a volume and then run two separate containers just to see if what I told you is true. Run the following commands one at a time:

docker volume create hello_volume
docker volume ls

As you see, we have now created a Docker volume. The next step is to create a container that uses this volume.

docker run --name hello_container1 -v hello_volume:/src -i -t ubuntu /bin/bash

We've now created a container named hello_container1 and told Docker that we want to access hello_volume at /src inside it. Now do the following:

cd src
ls
touch index.js
exit

The src folder should initially be empty, so you create a file called index.js followed by exiting that container. Now we create another container that's going to use the same volume:

docker run --name hello_container2 -v hello_volume:/src -i -t ubuntu /bin/bash
cd src
ls

As you see, index.js is there, even though hello_container1 and hello_container2 have their own filesystems. You can also use the inspect command on the volume, like docker volume inspect hello_volume, which gives you some information about the volume. Run docker volume --help when you want a concise list of the available volume commands.
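The same thing holds for application code: anything a program writes below the mount point ends up in the volume. The following sketch appends a line to a log file and reads it back. It assumes the data directory is given through a DATA_DIR environment variable (my own convention, for example /src inside the containers above) and falls back to a temporary directory so that it also runs outside a container.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Assumption: DATA_DIR points at the directory where the volume is mounted.
// Without it, a fresh temporary directory stands in for the volume.
const DATA_DIR = process.env.DATA_DIR || fs.mkdtempSync(path.join(os.tmpdir(), 'hello_volume-'));

// Appends the caller's name to visits.log and returns every name recorded so far.
function recordVisit(containerName, dir = DATA_DIR) {
  const file = path.join(dir, 'visits.log');
  fs.appendFileSync(file, `${containerName}\n`);
  return fs.readFileSync(file, 'utf8').trim().split('\n');
}

recordVisit('hello_container1');
console.log(recordVisit('hello_container2'));
```

When both calls write to the same directory, the second one also sees the entry from the first. With a shared volume, that works the same way across two separate containers.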

5. The Docker Compose Tool

Docker Compose is a tool for defining, running, and managing multi-container applications. Compose makes it easier to define services that we want to run together in an isolated environment, because we describe the services that make up our app in a YAML file called docker-compose.yml. Then we can run a single command, like docker-compose up, to start all the services that make up our app. Here are three examples of things that Compose can help us with:

- Run multi-container applications with ease.

- Automate testing environments.

- Set up testing environments for CI, like Travis.

For a more concrete example, let's say we have a web application that depends on multiple services. We can either set up each service manually, or define them in a YAML file and run a command like docker-compose up -d.

The YAML file explained

One benefit of the YAML file is that we get a snapshot of the entire project. Let's look at the structure of a .yml file:

version: ""
services:
  …
volumes:
  …
networks:
  ...

Volumes and networks are optional, but you'll need to define at least one service. The Compose file format differs between versions, and you declare which version you're using at the top of the file. At the time of writing, version 3 is the newest and the recommended one. Furthermore, we define the services like:

version: "3.7"
services:
  container-name:
    container-options
  container-name:
    container-options

As you see, we can define multiple services that either work together or just live in the same stack. How you configure the services is up to you, and you have lots of options. You can read more about the available options here.

The Docker Compose command

The docker-compose command depends on the context it is run in, because it uses the YAML file of that context. This means that docker-compose only cares about that context's service stack, i.e. the containers you defined in docker-compose.yml. All other containers are ignored. Here are a couple of useful commands:

- docker-compose up: builds, (re)creates, starts, and attaches to containers for a service.

- docker-compose down: stops and removes the containers and networks created by up.

- docker-compose kill: forceful stop, may result in loss of data.

- docker-compose stop: graceful stop.

- docker-compose rm: removes stopped service containers.

6. Why do we use Docker Swarm?

How Docker Swarm works is beyond the scope of this article. But why do we use Docker Swarm? Let's imagine a web application that runs in a Docker container. This particular container can handle 100 requests per second. If you want the app to handle more requests, you could create another container, provided there are enough resources available on the node (the machine) that the container runs on. Then you would use load balancing to spread the incoming requests across the containers. But what happens when your machine's resources are all in use? A naive approach would be to simply buy a bigger and better machine, which could work, but what happens when we later need even more resources?

A better solution could be to buy more machines and connect them, which means we don't need to replace the current machine with a better one; we just add another. This is not only good for workload but also for availability: if we have three nodes and one goes down, our web application will still be reachable. Combining nodes like this is known as a cluster. The definition of a cluster is "a group of machines that work together to run workloads and provide high availability".

Managing all of these nodes separately would be a real hassle. This is where Docker Swarm Mode comes in: it allows us to manage the cluster. A swarm, in Docker terms, is a cluster of nodes that have the Docker Engine installed. Docker Swarm Mode hides a lot of complexity from us and saves us hours of configuration and management. There's more to it, but this is the gist of it. Hopefully, that clears up some questions you might have had.
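To illustrate the load balancing and availability ideas from this section, here is a toy round-robin balancer. It is only a sketch of the concept and not how Docker Swarm routes traffic internally. The node names and the createBalancer function are made up for the example.

```javascript
// Hands out nodes in turn and skips nodes that are marked as down.
function createBalancer(nodes) {
  let next = 0;
  return {
    markDown(name) {
      nodes.find((node) => node.name === name).healthy = false;
    },
    pick() {
      for (let i = 0; i < nodes.length; i++) {
        const index = (next + i) % nodes.length;
        if (nodes[index].healthy) {
          next = (index + 1) % nodes.length;
          return nodes[index].name;
        }
      }
      throw new Error('no healthy nodes available');
    },
  };
}

const balancer = createBalancer([
  { name: 'node-1', healthy: true },
  { name: 'node-2', healthy: true },
  { name: 'node-3', healthy: true },
]);

console.log(balancer.pick(), balancer.pick(), balancer.pick()); // node-1 node-2 node-3

// One node goes down, but requests are still served by the other two.
balancer.markDown('node-2');
console.log(balancer.pick(), balancer.pick(), balancer.pick()); // node-1 node-3 node-1
```

Adding capacity then means adding another entry to the list of nodes instead of replacing a machine.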

7. Using Docker in a Project

The goal of this section is to Dockerize a development environment for a MERN app. This section is going to be very concise and for illustration purposes only, so it's okay if you don't understand everything.

We'll set everything up and then break down what we just did. So, start by creating empty folders:

mkdir docker
cd docker
mkdir backend
mkdir frontend

The backend folder

Inside the backend folder, run the following commands one at a time:

npm init
npm install express --save
npm install mongodb --save

Make sure your package.json has a script like "start": "node app.js". Now, create an app.js file and add the following code to it:

const express = require('express');
const app = express();
const mongo = require('mongodb').MongoClient;

const PORT = 3001;
const DB_URL = 'mongodb://mongodb:27017';
const COLLECTION = 'products';

const getCollection = async (dsn, collectionName) => {
  const client = await mongo.connect(dsn, {
    useUnifiedTopology: true,
    useNewUrlParser: true
  });
  const db = client.db('shop');
  const col = await db.collection(collectionName);
  return { client, col };
};

app.get('/', (req, res) => res.send('Hello World!'));

/*
 * Always inserts { name: 'dark roasted coffee'} on each GET-request
 */
app.get('/insert', async (req, res) => {
  try {
    const { client, col } = await getCollection(DB_URL, COLLECTION);
    await col.insertOne({ name: 'dark roasted coffee' });
    client.close();
    res.send('Success!');
  } catch (err) {
    console.log(err);
    res.send('Fail!');
  }
});

/*
 * Fetches all products
 */
app.get('/fetch', async (req, res) => {
  try {
    const { client, col } = await getCollection(DB_URL, COLLECTION);
    const result = await col.find({}).toArray();
    client.close();
    res.json(result);
  } catch (err) {
    res.send('Fail!');
  }
});

app.listen(PORT, () => console.log(`Example app listening on port ${PORT}!`));

Next, create a file called Dockerfile with the following content:

FROM node:10
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 3001
CMD [ "npm", "start" ]

Now also create a .dockerignore file and add the following to it:

node_modules

The frontend folder

Inside the frontend folder, start by running:

npx create-react-app .

Then add the following to a Dockerfile:

FROM node:10
WORKDIR /app
EXPOSE 3000
CMD [ "npm", "start" ]

YAML-file for Docker Compose

Now make sure you’re in the docker directory and create a docker-compose.yml file. Add the following to it:

version: "3.7"
services:
  backend:
    build: ./backend/
    ports:
      - "3001:3001"
    depends_on:
      - mongodb
    networks:
      - app_net
  frontend:
    build: ./frontend
    volumes:
      - "./frontend:/app"
    ports:
      - "3000:3000"
    environment:
      - CHOKIDAR_USEPOLLING=true
    networks:
      - app_net
  mongodb:
    image: mongo
    volumes:
      - "./data/db:/data/db"
    ports:
      - "27017:27017"
    networks:
      - app_net
networks:
  app_net:
    driver: bridge

Using Docker and Breakdown

Now we can start the entire project easily:

docker-compose build
docker-compose up

Don't be discouraged if it takes some time. That's because you don't have the images available locally yet. If any errors occur, it could be for numerous reasons. Make sure MongoDB is not already running on your machine, since it would occupy port 27017. If your React app loads but doesn't hot reload on changes, then read this; it's the reason I added CHOKIDAR_USEPOLLING=true. The next step would be to make sure the frontend can interact with the backend. I'll leave that as an exercise.

Let's break down what we just did. We have two Dockerfiles, one in /backend and one in /frontend. There's a big difference between the two. With /frontend/Dockerfile we are not copying anything into the image. We only set a working directory called /app, and /frontend is mounted into the container at /app through the volumes key in docker-compose.yml. With /backend/Dockerfile, on the other hand, we are copying the entire project into the image. Of course, we could have mounted /backend in the same way, but this illustrates the difference. Note that we now have to manually run npm install inside /frontend.

We also have a docker-compose.yml file in /docker. The docker-compose.yml file glues everything together by defining three services. We do not have a Dockerfile for mongodb since we declare that we want to use the mongo image. Let's look at the backend service. With the build property, we define the build context. Then we use depends_on: mongodb so that the mongodb service always gets started before backend, because backend depends on it to work correctly. Keep in mind that depends_on only controls the start order; it does not wait until MongoDB is ready to accept connections. The other services are configured just as their properties imply.
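One way to cope with a database that has been started but isn't ready yet is to retry the connection a few times before giving up. Below is a minimal sketch of such a helper. The name withRetry and its options are my own, and flakyConnect is a stand-in for a real connection attempt so the example runs without MongoDB.

```javascript
// Calls fn until it succeeds or the number of attempts is used up,
// waiting delayMs between attempts.
async function withRetry(fn, { attempts = 5, delayMs = 1000 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return await fn();
    } catch (err) {
      lastError = err;
      if (attempt < attempts) {
        await new Promise((resolve) => setTimeout(resolve, delayMs));
      }
    }
  }
  throw lastError;
}

// Stand-in for a connection attempt: fails twice, then succeeds.
let calls = 0;
const flakyConnect = async () => {
  calls += 1;
  if (calls < 3) throw new Error('connection refused');
  return 'connected';
};

withRetry(flakyConnect, { attempts: 5, delayMs: 10 })
  .then((result) => console.log(`${result} after ${calls} attempts`));
// connected after 3 attempts
```

In app.js, the function passed to withRetry could be something like () => mongo.connect(DB_URL, { useUnifiedTopology: true, useNewUrlParser: true }).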

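But why are we using networks? The reason is that we want our containers in the same network so we can use a service name as a hostname, which is what we did on line 6 in /backend/app.js with const DB_URL = 'mongodb://mongodb:27017';. Compose would also put the services on a default network for us, but declaring app_net makes the setup explicit.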
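Since the hostname is just the service name, it can be handy to make it configurable so the same code also runs outside of Compose. The sketch below builds the connection string from environment variables. MONGO_HOST and MONGO_PORT are names I picked for illustration; they are not set by Docker or Compose automatically.

```javascript
// Builds the MongoDB URL, defaulting to the Compose service name "mongodb".
function mongoUrl(env = process.env) {
  const host = env.MONGO_HOST || 'mongodb';
  const port = env.MONGO_PORT || '27017';
  return `mongodb://${host}:${port}`;
}

console.log(mongoUrl({}));                          // mongodb://mongodb:27017
console.log(mongoUrl({ MONGO_HOST: 'localhost' })); // mongodb://localhost:27017
```

Inside the Compose network the default resolves to the mongodb container, while on your own machine you could set MONGO_HOST=localhost to reach the published port.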