History of JavaScript Frameworks

by Nicklas Envall

The e-book is available in EPUB and PDF format at LeanPub. It's a great way to support the project. Your support is highly appreciated.

Table of contents:

- Preface

- Birth of JavaScript Frameworks

- Rise of MV* Frameworks

- The Holy Trinity

- Closer look at Data Binding

- Rise of Compiler Frameworks

- Frameworks within frameworks

- Frameworks solving the Hydration problem

- Do you need a framework?

Preface

There are many frameworks and libraries in the JavaScript ecosystem. Some say we live in the renaissance of JavaScript, an innovative and fast-paced time where old tools continuously get replaced with new tools. A time where we constantly push the limits of our JavaScript applications.

On the other side of the coin, many feel fatigued because the JavaScript ecosystem is massive. On top of that, around every corner of GitHub, yet another 10,000-star framework that you have never heard of awaits you.

But why do we have so many frameworks, and what's their purpose? To better understand the present, we can look back at the past. This book will take you on a journey from the 90s to the present. In our travels, we'll encounter many frameworks and libraries. These meetings will increase our knowledge of their patterns, history, use cases, and more.

Our mission is clear. It is to explore the history of JavaScript frameworks.

Birth of JavaScript Frameworks

JavaScript was created in 1995 and gives us the possibility to make our pages come alive. With it, we can respond to user events, perform animations, validate input, and much more. We use the term dynamic HTML (DHTML) to describe this concept. Yet the magical DHTML wasn't really utilized in the 90s because it was troublesome to write JavaScript that worked well for more than one browser due to incompatible platforms. The platforms' disarray derives from the havoc caused by the browser war. The companies Netscape and Microsoft had deliberately been trying to best each other by creating new features for their browsers to gain the interest of developers, which subsequently led to incompatible platforms — or better put, a minefield. As a result, the developers had to carefully ensure that their code worked for whichever browser their users chose.

Luckily, a standard was on the way. Netscape submitted JavaScript to ECMA International for standardization, and in 1997 the first edition of the resulting language specification, ECMAScript, was published to ensure interoperable implementations. ECMA International is a standards organization that, to this day, releases editions of ECMAScript. So, in principle, JavaScript is ECMAScript, since ECMAScript became and still is the language's standard specification. New features are continuously proposed for the language and later released in newer ES (short for ECMAScript) editions, which is why you see articles written about ES3, ES5, ES6, and so on. However, since browsers run JavaScript with an engine, often developed by the browser vendors themselves, it's up to each vendor whether to abide by the specification.

The browser war had also come to an end. Microsoft ruthlessly crushed Netscape by giving away Internet Explorer 4 for free, pre-installed with its Windows operating system, and emerged as the victor. The reward was that Internet Explorer dominated the market almost exclusively for years. Innovation halted as a result: from 2001 to 2006, only one new version of Internet Explorer was released. Nevertheless, some sense of stability finally emerged, and more developers could now give DHTML a go. But there were still challenges to overcome because JavaScript was hardly welcomed with open arms by the developer community. It got ridiculed and regarded as an inferior language that developers used only when they had to add some GUI widget or client-side validation. Through the years, JavaScript has evolved and become widely used in Web development. Yet even to this day, despite its acquired accolades, it's still despised by many. Sometimes that intense dislike stems from valid points, but sometimes it derives from a lack of understanding. Admittedly, even Brendan Eich designed JavaScript to be approachable and saw Java or C++ as languages for the "experts." JavaScript was treated as the sidekick to Java and still carries that stigma.

Nevertheless, the dawn of a new era was upon us when companies began experimenting with a collection of web technologies. Ever since the early '90s, websites had been divided into Web pages. Each time a user requested a Web page, the browser had to do a full page load. Consequently, navigating a website was not as smooth as using a desktop application. The problem did not only occur during navigation; it also happened when sending data to a server. For example, after submitting a filled-out form, the user had to wait for a confirmation page. This annoyance led developers to experiment with different technologies to see how they could make their websites behave more like desktop applications. Strangely enough, Microsoft had already created the technology that could solve the issue in 1999. Microsoft's Outlook Web Access team had devised a way to send HTTP requests with client-side scripts. That made it possible for the client to request data from a server and then update parts of a page dynamically with the retrieved data, without reloading the page. As a result, users could keep interacting with the website while requests were handled in the background. The technology was subsequently added to Internet Explorer 5.0. Later, the request became known as an XMLHttpRequest, which you could initiate via JavaScript. Yet, it took six years until it became widely known to the public.

Around 2005, JavaScript got some much-needed love when a user interface designer named Jesse James Garrett took note of impressive new websites such as Gmail, Google Maps, and Flickr. Those websites utilized XMLHttpRequests and a collection of technologies to create desktop-like applications. For this collection of web technologies, Garrett coined the term Asynchronous JavaScript + XML (AJAX). AJAX lets an application request data from servers without reloading the page, so developers could use DHTML to update the display with the data from the server response. All of this caused a great awakening even though these techniques had already existed for some time. The awakening led developers to set out to push the limits of their web applications.
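To make the idea more concrete, here is a minimal sketch of a background request with XMLHttpRequest. The getJson helper, its XhrCtor parameter, and the /api/price URL in the comment are hypothetical names made up for this illustration; XhrCtor exists only so the function can be tried outside a browser.

```javascript
// Minimal AJAX sketch: request JSON in the background and hand it to a callback.
// getJson and XhrCtor are hypothetical names used for illustration only.
function getJson(url, onSuccess, onError, XhrCtor = globalThis.XMLHttpRequest) {
  const xhr = new XhrCtor();
  xhr.open("GET", url, true); // third argument true = asynchronous
  xhr.onreadystatechange = () => {
    if (xhr.readyState !== 4) return; // 4 = DONE
    if (xhr.status >= 200 && xhr.status < 300) {
      onSuccess(JSON.parse(xhr.responseText));
    } else {
      onError(new Error("Request failed with status " + xhr.status));
    }
  };
  xhr.send();
}

// In a browser, usage could look like this (the URL and element id are assumptions):
// getJson(
//   "/api/price",
//   (data) => { document.getElementById("price").textContent = data.price; },
//   (error) => console.error(error)
// );
```

The page stays interactive while the request is in flight, and only the element we choose gets updated once the response arrives.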

The new dreams and ambitions of developers increased the level of complexity of their projects, while incompatibilities still haunted popular browsers. This is about when frameworks and libraries, the so-called AJAX frameworks, finally started to go mainstream. Some popular ones were:

- Prototype.js

- Dojo

- MooTools

- Yahoo! User Interface Library (YUI)

These frameworks sought to solve problems such as:

- Simplifying AJAX and dynamic loading.

- Handling cross-browser events.

- Traversing and manipulating the DOM.

- Browser incompatibilities.

So as we see, there were already a lot of frameworks to choose from between 2000 and 2005. You can visit the old ajaxpatterns.org site via the Web archives to see for yourself. Most of these frameworks have since vanished, but one library has survived from that era all the way to the present. You've probably heard of it, and that is, of course, jQuery.

In January 2006, the now somewhat infamous jQuery library got released. Its author, John Resig, wanted to make it easier to work with JavaScript in the browser. So by focusing on writing and experimenting with different utilities and tools for DOM interactions with browser incompatibilities in mind, Resig could eventually merge them into a single library called jQuery. Later on, methods for working with AJAX also got added due to requests by users.

The library succeeded in attracting a large following and making it easier to work with JavaScript. But it also prompted developers to create larger projects, which introduced more complexity and a set of problems with maintaining a project. These projects often became unmaintainable because they contained lots of so-called spaghetti code. These maintainability problems made it evident that a library like jQuery wasn't enough for what developers were trying to accomplish at the time. There was no clear guidance on how to organize and structure our code.

So, a demand for something that would decrease the complexity and maintainability issues for even bigger projects surfaced. As a result, new JavaScript frameworks trying to solve these maintainability issues began to emerge.

Rise of MV* Frameworks

In 2010, Knockout.js, Backbone.js, and AngularJS joined the scene. They all belong to the MV* (Model View Whatever) family, which means that they take concepts from MVC.

We will now study the MV* family as preparation for the continuation of our framework journey.

What is MV*?

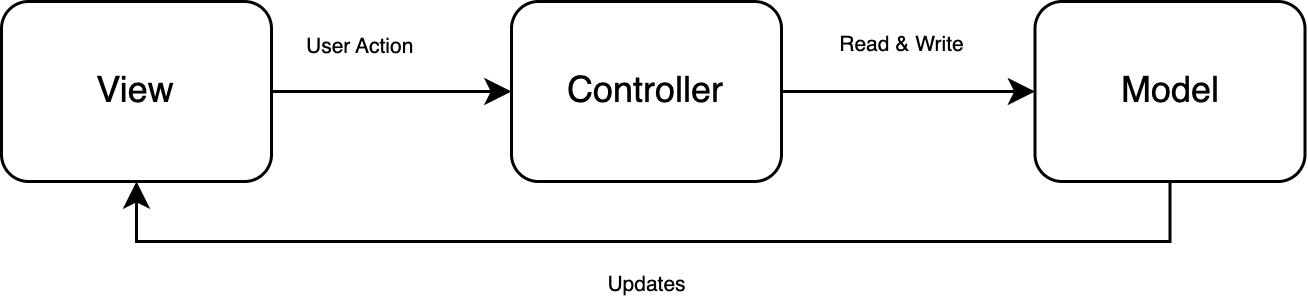

In essence, with Model–view–controller (MVC), the Model contains logic, the View is a presentation for a model, and the Controller is a link between the user and system.

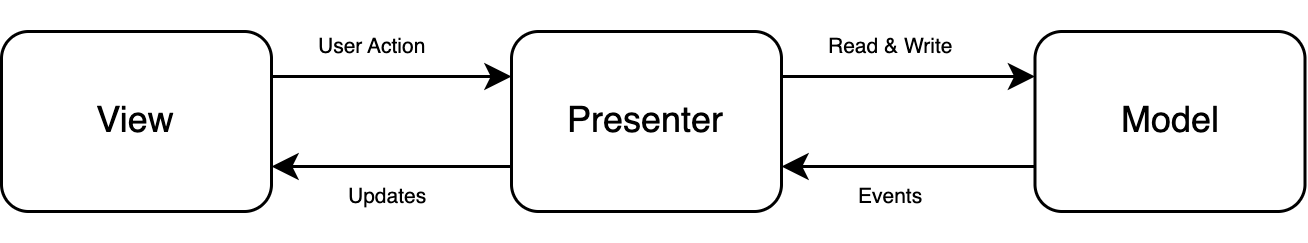

With Model–view–presenter (MVP), the user interacts with the View that then delegates responsibility to the Presenter. The Presenter sits between the View and the Model. The Model itself can trigger events that the Presenter can subscribe to (listen to) and then act accordingly.

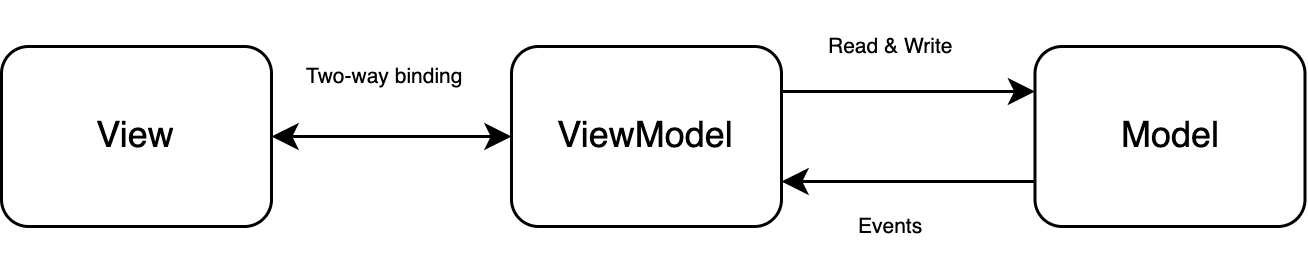

With Model–view–viewmodel (MVVM), the communication between the View and the ViewModel is automated with a binder. So, user interactions get forwarded to the ViewModel with data bindings. Then, the Model itself still contains the business logic or data.

Lastly, we need to understand a key difference between a framework and a library. Backbone.js and Knockout.js are libraries, while AngularJS is a framework. This means that AngularJS holds strong opinions on the architecture of your application. Backbone.js and Knockout.js give you more freedom in this respect by putting more responsibility on you.

History of MV* Frameworks

In July 2010, Knockout.js (abbreviated KO) got released. It is a library created by Steve Sanderson, who also made the first version of Blazor. Knockout's foundation is the Model–View–ViewModel (MVVM) design pattern. The pattern gets implemented with observables and bindings.

You bind HTML elements to observables on a ViewModel, and Knockout then tracks any subsequent changes to those properties. Changes in a bound element are automatically reflected in the ViewModel, and vice versa. As a result, we achieve two-way binding.

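    <p>The price is <span data-bind="text: price"></span></p>
    <button data-bind="click: increasePrice">Increase the price</button>

    <script>
      const viewModel = {
        price: ko.observable(10),
        increasePrice() {
          // Observables are functions: call without arguments to read, with a value to write.
          viewModel.price(viewModel.price() + 1);
        },
      };
      // Runs after the markup above exists so the bindings can attach to it.
      ko.applyBindings(viewModel);
    </script>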

Steve Sanderson never intended to replace jQuery; quite the contrary. Sanderson expected that Knockout and jQuery would likely get used together. Rather, the author's idea was to avoid complicated event handlers and give us a coherent underlying data model that we bind to our user interface. Moreover, Knockout includes several utility functions like array filters and JSON parsing. In conclusion, it is a library that allows us to utilize two-way binding while also leaving you a lot of freedom in how to structure your project.

In October 2010, Backbone.js got released by the creator of CoffeeScript, Jeremy Ashkenas. Backbone.js' approach to building apps is similar to the Model-View-Presenter (MVP) pattern. The MVP pattern can be implemented by using Backbone.Model for the M, templates (like Underscore/Mustache) for the V, and lastly, despite its name, Backbone.View for the P.

Unlike other frameworks and libraries that use a declarative approach to updating the DOM, Backbone.js uses a more imperative one. For instance, look below inside the render method of the Backbone.View. It contains a selector which imperatively updates the DOM (though the template is arguably declarative). In other words, Knockout can solely focus on the model, while with Backbone, you'll have to update the DOM manually.

    <div id="price-container"></div>

    <!-- VIEW -->
    <script type="text/template" id="price-template">
      <p>The price is <%- price %></p>
      <button id="priceBtn">Increase the price</button>
    </script>

    <script>
      // MODEL
      const PriceModel = Backbone.Model.extend({
        defaults: {
          price: 10,
        },
      });

      // PRESENTER
      const PriceView = Backbone.View.extend({
        el: "#price-container",
        template: _.template($("#price-template").html()),
        events: {
          "click #priceBtn": "increasePrice",
        },
        initialize() {
          this.listenTo(this.model, "change", this.render);
          this.render(); // render on create
        },
        render() {
          const valuesForTemplate = this.model.toJSON();
          this.$el.html(this.template(valuesForTemplate));
          return this;
        },
        increasePrice() {
          this.model.set("price", this.model.get("price") + 1);
        },
      });

      const priceModel = new PriceModel();
      // Start everything by creating the view
      new PriceView({ model: priceModel });
    </script>

Almost a decade ago, Jeremy declared in a presentation about Backbone that Backbone, in a nutshell, aims to provide you with the pieces you need when building a JavaScript application: at its core, a way to work with your data and UI by utilizing models and views. Additionally, Backbone offers more convenient methods for manipulating them than raw JavaScript does. Beyond that, it also helps with client-side routing by mapping locations of your app to URLs with Backbone.Router. So the Backbone library leaves you with a "smörgåsbord" of tools to build small or large applications.

Later in October 2010, the AngularJS framework got released. AngularJS was started as a side project by Miško Hevery and Adam Abrons. AngularJS' foundation is the MVW (Model-View-Whatever) pattern, which it implements with a view, a model, and a controller. For the view, AngularJS extends the capabilities of HTML with directives, which are attributes prefixed with ng- that you add to elements. Directives provided a convenient way to bind HTML to JavaScript objects, yet at the time, they provoked debate about whether we really should extend HTML.

Nonetheless, developers were still impressed by the two-way data binding that AngularJS delivered. To control the data, you would create a controller and attach it to the DOM by adding the ng-controller directive to an element. The controller then receives a $scope object (representing the model) that glues the view and controller together. As a result, the controller can solely focus on the model.

    <script>
      const app = angular.module("priceApp", []);
      app.controller("priceController", ($scope) => {
        $scope.price = 10;
        $scope.msg = "hello";
        $scope.increasePrice = () => $scope.price++;
      });
    </script>

    <div ng-app="priceApp" ng-controller="priceController">
      <!-- one-way binding -->
      <p>{{msg}}</p>
      <p>The price is {{price}}</p>

      <!-- Event binding -->
      <button ng-click="increasePrice()">Increase the price</button>

      <!-- two-way data binding -->
      <input ng-model="msg" />
    </div>

As the story goes, Miško worked at Google and had been working on a project called Google Feedback for six months that had amassed over 17000 lines of code. Accordingly, testing and adding new features to the project became increasingly difficult. However, Miško thought it was possible to rewrite the project in two weeks with AngularJS. So a manager at Google called Brad Green agreed to a bet with Miško on that. Ultimately, Green won the bet. But Miško was able to complete the task in 3 weeks.

Although perhaps even more impressive than the short time span was that Miško had reduced the codebase to 1500 lines of code. Yet, Google was not ready to support it despite the success of this particular project. So AngularJS was open-sourced. Consequently, other developers got a chance to try it out, and one of them was Marc Jacobs, who somewhat ironically worked at Google. Google had acquired the company DoubleClick and began rewriting the project. Now, Marc Jacobs worked as a tech lead on that project and decided to use AngularJS. In the end, that project was also successful, and ever since, AngularJS has been used internally and maintained by Google.

So, the experience from Miško's project at Google is one of the reasons why AngularJS also puts a great deal of effort into testing, which we haven't covered here. Moreover, AngularJS uses dependency injection (DI) to achieve inversion of control (IoC). In conclusion, AngularJS is a framework with strong opinions that gives you many built-in tools.

Even more

In 2011, Ember.js got released. Its original name was SproutCore MVC framework, but it was later changed to Ember. Ember is a highly opinionated framework based on the Model-View-ViewModel (MVVM) pattern. It also depends on micro libraries for its structure. Furthermore, Ember has been used by the likes of Apple Music, LinkedIn, and Twitch. The authors came from the Ruby on Rails community, which prefers convention over configuration, a mindset that they brought to Ember. This means that Ember makes a lot of assumptions about how a project should be structured and abstracts boilerplate away from the developer.

In 2012, Meteor.js was released (first named Skybreak in 2011). Meteor.js is an isomorphic framework which allows us to write JavaScript for both the client and server. However, Meteor is more like a platform, and after you install it, you will use the Meteor CLI to set up a project, run the app, compile and minify scripts and styles, etc. Moreover, many developers were interested in Meteor when it first was released, but now it seems that the interest has faded, at least from the mainstream.

The Holy Trinity

New frameworks solved many maintainability issues. Yet, we were at a crossroads again. The developers had got a taste of what was possible, so a desire to create even more interactive web apps arose. The problem was that current frameworks at the time were not satisfactory enough because they emphasized structure and the Model layer.

Thus, the holy trinity entered the picture. The holy trinity consists of React, Vue, and a fully updated AngularJS called Angular. Angular is a full-fledged framework, while Vue and React are concerned with the view layer. For example, React helps you efficiently develop user interfaces while leaving concerns like routing, state management, and API interactions up to you to solve with the help of the ecosystem.

View-layer-centered libraries are also used not only for new projects but for old projects too, as a means to update an existing codebase incrementally. For example, you can add one React component at a time and, by doing so, slowly migrate to React. The caveat with something like React is that there are many different state management libraries to choose from, and architectures can differ significantly between companies and projects.

React

In 2013, React got released by Facebook. It's a library, although many frequently call it a framework. React is the V in MVC and only deals with the view layer. The views get written with JSX (JavaScript XML), which conveniently allows us to write HTML-like markup directly in our JavaScript.

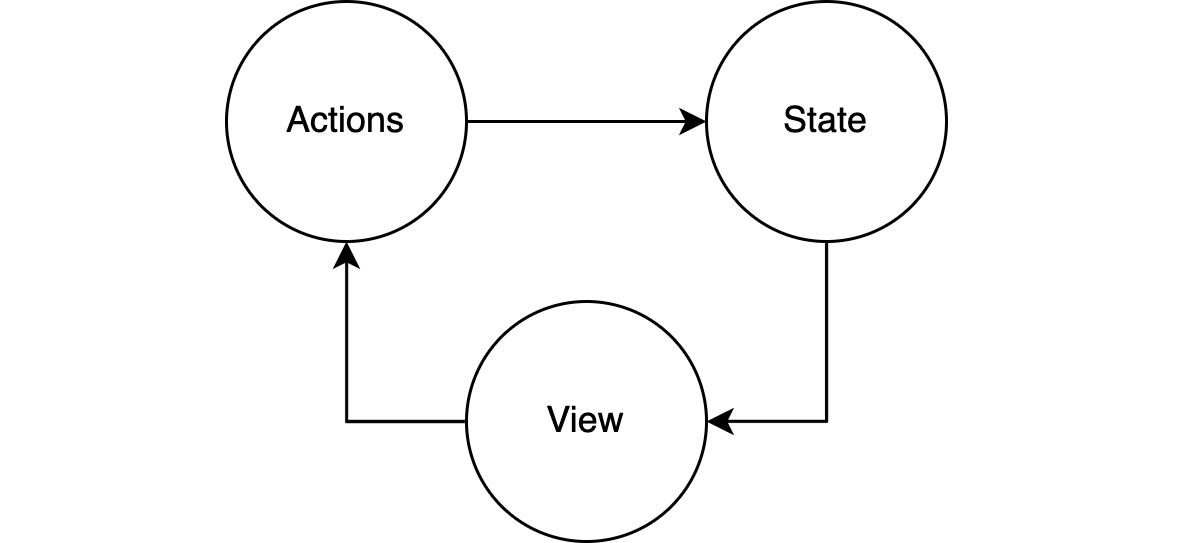

What distinguished React is that data flows in one direction. We have state, views, and events. The view triggers an action that updates the state, and when the state updates, the view gets re-rendered with the new state. The advantage is that we get a single source of truth. In other words, we don't have to keep track of multiple event handlers that each update the UI on their own.
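The loop described above can be sketched without any framework. The createStore function and its dispatch method below are hypothetical names chosen for this example and are not React APIs; the render callback simply records strings so that the sketch also runs outside a browser.

```javascript
// Hypothetical, framework-free illustration of one-way data flow:
// event -> action -> new state -> re-render.
function createStore(initialState, render) {
  let state = initialState;
  render(state); // initial render
  return {
    getState: () => state,
    // The only way to change state; every change triggers a re-render.
    dispatch(update) {
      state = { ...state, ...update(state) };
      render(state);
    },
  };
}

const rendered = [];
const store = createStore({ price: 10 }, (state) => {
  rendered.push(`The price is ${state.price}`);
});

// Simulates what a click handler on the "Increase the price" button would do.
store.dispatch((state) => ({ price: state.price + 1 }));
console.log(rendered); // two renders: "The price is 10", then "The price is 11"
```

The view never mutates the state directly; it can only ask for a change, which keeps the state the single source of truth.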

In React's early days, it relied on class components:

    import React from "react";

    export default class App extends React.Component {
      constructor(props) {
        super(props);
        this.state = {
          price: 10,
        };
        // Bind the handler so `this` refers to the component when the button calls it.
        this.increasePrice = this.increasePrice.bind(this);
      }

      increasePrice() {
        this.setState((prevState) => ({
          price: prevState.price + 1,
        }));
      }

      render() {
        return (
          <div>
            <p>The price is {this.state.price}</p>
            <button onClick={this.increasePrice}>Increase the price</button>
          </div>
        );
      }
    }

Later, with React v16.8.0, function components could hold state as well, thanks to the advent of hooks. A hook is a function that is used within a function component to "hook into" React state and lifecycle features.

    import { useState } from "react";

    export default function App() {
      const [price, setPrice] = useState(10);

      return (
        <div>
          <p>The price is {price}</p>
          <button onClick={() => setPrice(price + 1)}>Increase the price</button>
        </div>
      );
    }

React also has an algorithm called reconciliation. The algorithm's purpose is to mitigate expensive DOM manipulation. It includes a so-called Virtual DOM (VDOM), which mirrors the DOM in memory. When an update occurs (like using setState), the reconciler creates a new VDOM and compares that with the previous VDOM. Then, a batch update of the changes gets sent to the React Renderer.
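To get a feel for the comparison step, here is a deliberately naive sketch of diffing two virtual trees. It is not React's actual algorithm, which also relies on keys, component types, and other heuristics; the h helper, the node shape, and the patch objects are assumptions made for this illustration.

```javascript
// Hypothetical virtual nodes: { tag, props, children }, or a plain string for text.
const h = (tag, props = {}, ...children) => ({ tag, props, children });

// Compare two virtual trees and collect the patches a renderer would have to apply.
function diff(oldNode, newNode, path = "root", patches = []) {
  if (oldNode === undefined) {
    patches.push({ type: "CREATE", path, node: newNode });
  } else if (newNode === undefined) {
    patches.push({ type: "REMOVE", path });
  } else if (typeof oldNode === "string" || typeof newNode === "string") {
    if (oldNode !== newNode) patches.push({ type: "REPLACE", path, node: newNode });
  } else if (oldNode.tag !== newNode.tag) {
    patches.push({ type: "REPLACE", path, node: newNode });
  } else {
    // Same tag (prop diffing omitted for brevity): recurse into children by index.
    const length = Math.max(oldNode.children.length, newNode.children.length);
    for (let i = 0; i < length; i++) {
      diff(oldNode.children[i], newNode.children[i], `${path}/${i}`, patches);
    }
  }
  return patches;
}

const before = h("div", {}, h("p", {}, "The price is 10"), h("button", {}, "Increase"));
const after = h("div", {}, h("p", {}, "The price is 11"), h("button", {}, "Increase"));

const patches = diff(before, after);
console.log(patches); // a single REPLACE patch for the text node at "root/0/0"
```

Even though the whole tree is described again on every update, only the text node that actually changed ends up in the batch of patches.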

With version 16 of React, they also released React Fiber, a rewrite of React's core that enabled incremental rendering. In short, it makes it possible to break rendering work into chunks. Lastly, even though React does not offer things like routing, it does offer a large community and a rich ecosystem.

Vue

In 2014, Vue got released by former Google employee Evan You. Vue's heart is the ViewModel in the MVVM pattern. Vue makes it easy to create a ViewModel instance to utilize two-way binding for your view and model. For the view, Vue extends the capabilities of HTML by adding attributes prefixed with v-, much like AngularJS does with ng-.

A Vue component uses a watcher to know when to re-render based on updated properties. That is made possible with Vue's reactivity system. The reactivity system implemented in Vue 2 is different from Vue 3. Vue 2 introduces reactivity with getter/setters by using Object.defineProperty. Vue 3, on the other hand, uses ES6 proxies for its reactivity, where it, for example, intercepts set operations to know when to re-render the component.
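The two approaches can be compared with a small sketch. The functions observeWithDefineProperty and observeWithProxy are hypothetical names for this example and heavily simplified compared with Vue's real reactivity system, which also tracks which component or computed value depends on which property.

```javascript
// Vue 2 style: wrap each existing property in a getter/setter pair.
function observeWithDefineProperty(target, onChange) {
  Object.keys(target).forEach((key) => {
    let value = target[key];
    Object.defineProperty(target, key, {
      enumerable: true,
      configurable: true,
      get() {
        return value;
      },
      set(newValue) {
        value = newValue;
        onChange(key, newValue);
      },
    });
  });
  return target;
}

// Vue 3 style: a Proxy intercepts set operations on the object as a whole.
function observeWithProxy(target, onChange) {
  return new Proxy(target, {
    set(obj, key, newValue) {
      obj[key] = newValue;
      onChange(key, newValue);
      return true; // signals that the assignment succeeded
    },
  });
}

const log = [];
const v2State = observeWithDefineProperty({ price: 10 }, (k, v) => log.push(`v2 ${k}=${v}`));
const v3State = observeWithProxy({ price: 10 }, (k, v) => log.push(`v3 ${k}=${v}`));

v2State.price = 11; // detected
v2State.tax = 1.25; // not detected: the property did not exist when the object was observed
v3State.price = 11; // detected
v3State.tax = 1.25; // detected: the proxy also sees newly added properties
console.log(log);
```

The difference in the last two lines hints at why a proxy-based system is more flexible: it does not need to know an object's properties up front.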

To help Vue know what object to convert into a proxy, we return an object from the data() method, which gets invoked before the component initializes. The properties we add inside that object get referred to as dependencies, and the proxy knows when we either get or set them. Afterward, to use the power of reactivity, we can use things like the computed property.

    <div id="app">
      <!-- One-way binding -->
      <p>{{ total }}</p>
      <p>{{ msg }}</p>
      <p>{{ price }}</p>

      <!-- Event binding -->
      <button v-on:click="increasePrice">Increase the price</button>

      <!-- Two-way data binding -->
      <input type="text" v-model="msg" />
    </div>

    <script>
      new Vue({
        el: "#app",
        data: {
          price: 10,
          tax: 1.25,
          msg: "hello",
        },
        methods: {
          increasePrice: function () {
            this.price = this.price + 1;
          },
        },
        computed: {
          total() {
            return this.price * this.tax;
          },
        },
      });
    </script>

In the code example above, we see that the total is price multiplied by tax. So each time price gets updated by clicking the button, total will get recomputed based on its predefined formula.

Additionally, you will most likely today use a Vue Single-File Component (SFC) to encapsulate the template, logic, and styling of a Vue component in a single *.vue file:

    <template>
      <p>hello {{ price }}</p>
      <button @click="increasePrice">Increase the price</button>
    </template>

    <script>
    export default {
      name: "App",
      data() {
        return {
          price: 10,
        };
      },
      methods: {
        increasePrice() {
          this.price++;
        },
      },
    };
    </script>

The API of Vue is deeply influenced by AngularJS, KnockoutJS, Ractive.js, and Rivets.js. Evan You had been using Backbone.js and AngularJS but found that they didn't suit projects with an intense focus on interaction. Evan needed something else and, as a result, started building a small utility to solve that issue. That utility would eventually grow into the library known today as Vue. Originally it was named Seed.js, but that name already existed on npm, so Evan translated "view" into French, and Vue was born.

During Vue's early stages, Evan moved on from Google to work at Meteor.js as a developer while still working on Vue outside working hours. Yet, it wasn't until the Laravel founder, Taylor Otwell, tweeted that Vue was easy to use that Vue started to gain serious traction. Fast-forward to today, and it's referred to as a progressive framework: the core library focuses on the view layer, and you can progressively expand its capabilities by integrating other projects into your code. From what I see, it wasn't until the version 2 docs that they started calling it a progressive framework.

Angular

In 2016, AngularJS had a whole makeover. It became more mobile-oriented, provided a CLI, and was renamed Angular by dropping the "JS." This means that AngularJS refers to version 1, while Angular refers to version 2 and later. However, many AngularJS developers weren't comfortable with the transformation because Angular had little resemblance to AngularJS and lacked backward compatibility.

Angular revamped many features with the wisdom acquired from AngularJS and the evolution of the Web. It's important to understand that AngularJS got built with ES5, but Angular could now leverage the new features of ES6. Also, it now uses TypeScript by default instead of JavaScript. Furthermore, while AngularJS used scope and controllers, the new Angular relies on components instead. It even changed the names of common directives. For example, ng-repeat was changed to ngFor. Lastly, Angular also came with many under-the-hood improvements.

Angular also recommends using reactive-style programming with the help of the Observer Pattern. You can read my article about Design Patterns for more information about the Observer Pattern. Angular uses RxJS (Reactive Extensions for JavaScript) to implement the pattern. The library is built around observables and allows us to compose asynchronous, callback-based code with ease. The Angular team says it will use RxJS until JavaScript supports observables natively.
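To illustrate the idea behind observables, here is a hand-rolled sketch. SimpleObservable is a hypothetical class written for this example and is not RxJS's Observable, which offers far more (error and completion handling, many operators, schedulers, and so on).

```javascript
// A hand-rolled, hypothetical observable to illustrate the Observer Pattern.
class SimpleObservable {
  constructor(subscribeFn) {
    this.subscribeFn = subscribeFn;
  }

  // Subscribing starts the producer and returns an unsubscribe function.
  subscribe(observer) {
    let active = true;
    const teardown = this.subscribeFn({
      next: (value) => active && observer.next(value),
    });
    return () => {
      active = false;
      if (typeof teardown === "function") teardown();
    };
  }

  // Returns a new observable whose values are transformed by fn.
  map(fn) {
    return new SimpleObservable((observer) =>
      this.subscribe({ next: (value) => observer.next(fn(value)) })
    );
  }
}

const prices = new SimpleObservable((observer) => {
  [10, 11, 12].forEach((price) => observer.next(price));
});

const received = [];
const unsubscribe = prices
  .map((price) => price * 1.25)
  .subscribe({ next: (total) => received.push(total) });

unsubscribe();
console.log(received); // [ 12.5, 13.75, 15 ]
```

Nothing happens until someone subscribes, and every subscriber gets notified of each new value, which is the essence of the pattern.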

All of these changes ensured that Angular could remain relevant (sounds harsh, but it's true) even with the rise of Vue and React. Lastly, unlike React and Vue, Angular doesn't use a Virtual DOM because it utilizes an Incremental DOM.

Closer look at Data Binding

Data binding is the operation that creates the connection between the data and UI. Our JavaScript frameworks solve data-binding in different ways. So now we'll delve into the challenges the frameworks have faced when working with data-binding.

Understanding data binding is fundamental to being able to learn new frameworks quickly. Therefore, we'll start by learning the basics of three frameworks' approaches to data binding. Then, we will end the section by looking at the difference between two-way data binding and one-way data binding.

Frameworks in Action

We will now acquaint ourselves with Knockout, Vue, and Angular more practically.

Knockout.js in Action

Knockout has three core features:

- Observables and dependency tracking

- Declarative bindings

- Templating

To ensure that Knockout detects updates of our ViewModel's properties, we need to declare them as observables. We do so by using the ko.observable function.

    const viewModel = {
      message: ko.observable("first message"),
    };

Do not assign a value to an observable property because that will overwrite the observable. Instead, use the following rules:

    viewModel.message("new message");  // SET - OK
    viewModel.message();               // GET - OK
    viewModel.message = "new message"; // SET - NO
    viewModel.message;                 // GET - NO
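Why the function-call syntax matters becomes clearer with a small stand-in. The observable function below is a hypothetical, simplified imitation written for this example; it is not Knockout's implementation of ko.observable.

```javascript
// Hypothetical stand-in for ko.observable, to show why the call syntax matters.
function observable(initialValue) {
  let value = initialValue;
  const subscribers = [];

  function accessor(...args) {
    if (args.length === 0) return value; // GET: called without arguments
    value = args[0]; // SET: called with a new value
    subscribers.forEach((notify) => notify(value));
    return undefined;
  }

  accessor.subscribe = (notify) => subscribers.push(notify);
  return accessor;
}

const viewModel = { message: observable("first message") };
const seen = [];
viewModel.message.subscribe((value) => seen.push(value));

viewModel.message("new message"); // subscribers get notified
console.log(viewModel.message()); // "new message"

viewModel.message = "overwritten"; // replaces the function, so change tracking is lost
console.log(typeof viewModel.message); // "string"
```

Because the observable is itself a function, a plain assignment throws that function away, and nothing is left to notify the UI.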

Then we use Knockout's binding system to link our ViewModel to the UI. On an HTML element, we add an attribute called data-bind, and within it, we bind a name to a value like name: value:

The message is: <span data-bind="text: message"></span>

We can also add multiple bindings on an element by separating the binding with a comma:

The message is: <span data-bind="text: message, visible: true"></span>

Then finally, we need to activate Knockout, which we do with the ko.applyBindings function:

ko.applyBindings(viewModel);

Knockout offers much more, like observable arrays, computed observables, and subscriptions. Lastly, Knockout only updates the parts of the DOM affected when a change occurs.

Vue in Action

To comprehend Vue clearly, we need to understand directives. Directives are attributes prefixed with v- that tell the library to do something with a DOM element. There are some key directives to know:

- v-bind: for binding attributes, shorthand :attribute="expression".

- v-on: for event handling, shorthand @event="method" (you can also do @event.modifier).

- v-model: for easily creating two-way data binding.

- v-if: conditionally renders markup.

- v-for: renders a list of elements based on an array or object.

Let's not forget component options like components, data(), methods, and computed. You add these as properties to the exported object. For instance, components registers imported components, data() returns the state of a component, and methods contains the component's methods. Lastly, computed properties are similar to React's useMemo: they are cached and only re-evaluated when their dependencies update.

The following example highlights how data binding works in Vue. Ordinary JavaScript objects represent models in Vue, and the v-model directive provides a simple way to create two-way binding for form elements like <input> (including radio buttons and checkboxes), <textarea>, and <select>.

    <template>
      <div>
        <p>{{ inputText }}</p>
        <input v-model="inputText" />
      </div>
    </template>

    <script>
    export default {
      data() {
        return {
          inputText: "",
        };
      },
    };
    </script>

Inside a component, Vue uses two-way binding, but toward the parent it emits an event once the value changes. As a result, the prop itself is never mutated by the child, which can lead to confusing results if a developer implements two-way binding between a parent and a child incorrectly. We will see more of two-way binding with Vue when comparing it with other frameworks.

Angular in Action

With Angular, a component is associated with a template, and together they define a view. The component's logic gets put inside a class. Angular also makes heavy use of decorators; one example is the widely used @Component decorator, which defines metadata for a component. A class does not become a component class until we add the @Component decorator.

An Angular component at its core consists of:

- Template: a block of HTML that Angular renders for the associated component.

- Class: defines the behaviour of the component.

- Selector: is what Angular uses to init the component when it finds the element in a template.

- Optional private styling (scoped to that specific component).

As we see in the code example below, the @Component decorator gives us, among others, three properties for defining just that: selector, templateUrl, and styleUrls.

    import { Component } from "@angular/core";

    @Component({
      selector: "app-root",
      templateUrl: "./app.component.html",
      styleUrls: ["./app.component.css"],
    })
    export class AppComponent {
      title = "My app";
    }

The selector is what Angular uses to init the component when it finds the element in another template. Based on the example above, the HTML element will look like this:

<app-root></app-root>

We can increase our component's logic to include more behaviour:

    @Component({
      selector: "app-root",
      templateUrl: "./app.component.html",
      styleUrls: ["./app.component.css"],
    })
    export class AppComponent {
      title = "My app";
      username = "";

      alertWorld() {
        alert("Hello world");
      }
    }

Then we can make the connected template come alive with properties from the component and directives:

    <div>
      <h1>Welcome to {{ title }}!</h1>
      <p>Username: {{ username }}</p>
      <form>
        <!-- two-way binding; inside a form, ngModel requires a name attribute -->
        <input name="username" [(ngModel)]="username" />
      </form>
      <button type="button" (click)="alertWorld()">hello</button>
    </div>

There are also directives like *ngIf, *ngFor, and more. Perhaps more interesting is that Angular implements the design pattern dependency injection for loosely-coupled communication between classes. Angular does so by using the @Injectable decorator, with which we can inform Angular that a class should be in the DI system.

With this knowledge, we can create a service that we can use for logging:

// logger.service.ts import { Injectable } from "@angular/core"; @Injectable() export class Logger { save(text: string) { console.warn(text); // do something.. } }

Then to inject the service into our AppComponent, we need to supply an argument to the constructor with the dependency type like:

// app.component.ts import { Component } from "@angular/core"; import { Logger } from "./logger.service"; @Component({ selector: "app-root", templateUrl: "./app.component.html", styleUrls: ["./app.component.css"], providers: [Logger] }) export class AppComponent { title = "My app"; username = ""; constructor(private logger: Logger) {} alertWorld() { this.logger.save("hello world executed"); alert("Hello world"); } }
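To see the idea without Angular's machinery, here is a minimal, framework-free sketch of constructor injection. The Injector class, its resolve method, and the static deps field are hypothetical names chosen for illustration; they are not Angular's API, and Angular's real injector is hierarchical and driven by decorator metadata.

```javascript
// A toy injector: maps a class (the "token") to a lazily created singleton.
// Illustrative only; this is not how Angular's injector is implemented.
class Injector {
  constructor() {
    this.instances = new Map();
  }

  resolve(Type) {
    if (!this.instances.has(Type)) {
      // Resolve the dependencies a class declares before constructing it.
      const deps = (Type.deps || []).map((Dep) => this.resolve(Dep));
      this.instances.set(Type, new Type(...deps));
    }
    return this.instances.get(Type);
  }
}

class Logger {
  constructor() {
    this.entries = [];
  }
  save(text) {
    this.entries.push(text);
  }
}

class AppComponent {
  // Hypothetical stand-in for the type metadata Angular reads from the constructor.
  static deps = [Logger];

  constructor(logger) {
    this.logger = logger;
  }
  alertWorld() {
    this.logger.save("hello world executed");
  }
}

const injector = new Injector();
const app = injector.resolve(AppComponent);
app.alertWorld();
```

The component never calls new Logger() itself, so a test could hand it a fake logger instead, which is the loose coupling that dependency injection is after.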

Lastly, a worthy mention is that Angular has put a great effort into testing. You can even find an "Angular testing introduction" on their site.

Two-way data binding vs One-way data binding

Firstly, with Knockout we can create an example that illustrates the difference between two-way binding and one-way binding:

<!-- Two-way binding. After initial setup, it syncs both ways. --> <p>Value 1: <input data-bind="value: value1" /></p> <!-- One-way binding. After initial setup, it syncs from <input> to model. --> <p>Value 2: <input data-bind="value: value2" /></p> <script type="text/javascript"> var viewModel = { value1: ko.observable("First"), // Observable value2: "Second", // Not observable }; // Activate the bindings ko.applyBindings(viewModel); </script>

As we see, two-way binding is very convenient when we want to be able to update the model from the view and vice versa. But, the fact that we can update from two directions comes with great responsibility since complexity can arise.
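The difference comes down to whether the model can notify the view. The sketch below uses a tiny, hypothetical observable (it is not Knockout's implementation) and a plain object standing in for an input element, so it runs without a browser.

```javascript
// Minimal observable: call with no arguments to read, with one argument to write.
function observable(initial) {
  let value = initial;
  const subscribers = [];
  function accessor(next) {
    if (arguments.length === 0) return value;
    if (next !== value) {
      value = next;
      subscribers.forEach((notify) => notify(value));
    }
  }
  accessor.subscribe = (fn) => subscribers.push(fn);
  return accessor;
}

// A plain object standing in for an <input> element.
const input = { value: "", onchange: null };

const name = observable("First");

// Model -> view: update the field whenever the observable changes.
input.value = name();
name.subscribe((v) => { input.value = v; });

// View -> model: write back when the user edits the field.
input.onchange = (typed) => { input.value = typed; name(typed); };

name("Changed in model");
const viewAfterModelChange = input.value; // the view followed the model

input.onchange("Typed by user");
const modelAfterUserInput = name(); // the model followed the view
```

Removing the subscribe call leaves only the view-to-model direction, which is the one-way case from the Knockout example above.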

Depending on how a framework approaches data binding, you could end up with an infinite loop or hard-to-reason code. For example, imagine the following scenario:

- Model A updates Model B.

- Model B updates View B.

- View B updates Model B.

- Model B updates Model C.

- Model C updates View C.

- View C updates Model A.

Two-way binding can also decrease performance. AngularJS (v1.x) has a digest cycle that enables data binding. To clarify, when you use double curly braces {{ }} or reference a variable from a built-in directive (like ng-if="isValid" or ng-repeat), you create a watcher. The digest cycle is then used to detect changes. During the digest cycle, AngularJS loops over all watchers and compares the previous values of the observed expressions with their new values.

The reason why this is a problem derives from the fact that AngularJS supports "real" two-way binding. As a result, a single iteration through the digest loop is not enough since the model might update the view and vice versa. So when AngularJS finds a change, it'll trigger the connected code, and then it needs to loop the entire digest cycle again. AngularJS will repeat that process until no changes are detected.

// provokes an infinite digest loop $scope.$watch("counter", function () { $scope.counter = $scope.counter + 1; });
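The following is a simplified sketch of such a dirty-checking loop, not AngularJS's actual source. The Scope class and its watch and digest methods are illustrative names. The cap of 10 passes mirrors the limit after which AngularJS aborts a digest that never settles, so in practice the "infinite" loop above ends with an error.

```javascript
// Toy dirty-checking scope. Names loosely mirror AngularJS for readability only.
class Scope {
  constructor() {
    this.watchers = [];
  }

  watch(getValue, listener) {
    this.watchers.push({ getValue, listener, last: undefined });
  }

  digest(maxIterations = 10) {
    let iterations = 0;
    let dirty;
    do {
      dirty = false;
      for (const watcher of this.watchers) {
        const current = watcher.getValue(this);
        if (current !== watcher.last) {
          const previous = watcher.last;
          watcher.last = current;
          watcher.listener(current, previous, this);
          dirty = true; // a listener may have changed the model: loop again
        }
      }
      iterations += 1;
      if (dirty && iterations >= maxIterations) {
        throw new Error(maxIterations + " digest iterations reached");
      }
    } while (dirty);
    return iterations;
  }
}

// A stable scope: one watcher derives a value that another watcher observes.
const scope = new Scope();
scope.firstName = "Ada";
scope.watch((s) => s.firstName, (value, _old, s) => { s.greeting = "Hello " + value; });
scope.watch((s) => s.greeting, () => {});
const passes = scope.digest(); // needs an extra pass to confirm nothing changed

// An unstable scope: the listener keeps changing the value it watches.
const unstable = new Scope();
unstable.counter = 0;
unstable.watch((s) => s.counter, (_value, _old, s) => { s.counter += 1; });
let aborted = false;
try {
  unstable.digest();
} catch (error) {
  aborted = true;
}
```

Every pass visits every watcher, so the cost grows with both the number of watchers and the number of passes needed before the model settles.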

So to address this, Angular (2.x and up) removed two-way binding and now utilizes one-way data binding instead. Currently, with Angular, the data will flow from the model to the view. However, it might be confusing because Angular's documentation says that [(ngModel)] enables two-way binding. In reality, it means that it uses one-way data binding mechanisms to create the appearance of two-way data binding.

<input [ngModel]="username" (ngModelChange)="username = $event" /> <!-- shorthand for above --> <input [(ngModel)]="username" />

Yet it's not as simple as "two-way binding is evil." When asked about two-way binding, Evan You, the creator of Vue, explained that Vue and Angular (2.x and up) use "two-way binding" as syntax sugar for working with forms. Evan keenly emphasizes that this type of two-way binding is a shortcut for a one-way binding used in conjunction with a change listener that modifies the model. Thus, this approach to form handling should not be confused or compared with data binding between scopes, models, and components.

With that said, in Vue v1.x, it was possible to opt-in to enable two-way binding between components:

<!-- two-way prop binding --> <my-component :prop.sync="someThing"></my-component>

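However, Vue 2.0 removed this two-way .sync behaviour; version 2.3 brought the modifier back, but only as syntax sugar for a prop combined with an update event listener. Also, Vue's documentation explicitly declares things like prop mutation to be an anti-pattern. The switch derives from the fact that two-way binding between components can create maintenance headaches.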

On that note, Vue now enforces one-way binding between components: when the parent re-renders, it overwrites any changes the child made to the prop. So here's an example of what not to do:

<!-- App.vue --> <template> <div id="app"> {{ badTwoWayInput }} <input-form v-bind:badTwoWayInput="badTwoWayInput"></input-form> </div> </template> <script> import InputForm from "./components/InputForm"; export default { name: "App", components: { InputForm, }, data() { return { badTwoWayInput: "start value", }; }, }; </script>

<!-- InputForm.vue --> <template> <input v-model="badTwoWayInput" /> </template> <script> export default { props: { badTwoWayInput: String, }, }; </script>

Because this will now log the following warning:

[Vue warn]: Avoid mutating a prop directly since the value will be overwritten whenever the parent component re-renders. Instead, use a data or computed property based on the prop's value. Prop being mutated: "badTwoWayInput"
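The usual alternative is "props down, events up": the child keeps its own copy and reports changes, and only the parent writes to the state. Here is a framework-free sketch of that flow. The createParent and createInputForm functions and the onInput callback are hypothetical names, not Vue APIs.

```javascript
// The parent owns the state; the child receives a value and a callback.
function createParent() {
  const state = { text: "start value" };
  const child = createInputForm({
    value: state.text,
    onInput: (next) => { state.text = next; }, // the only place the state changes
  });
  return { state, child };
}

function createInputForm(props) {
  // Local copy based on the prop: the child never writes to props.value.
  let draft = props.value;
  return {
    type(text) {
      draft = text;
      props.onInput(draft); // "emit" the change upwards
    },
    getDraft: () => draft,
  };
}

const parent = createParent();
parent.child.type("edited by the user");
```

Because every change passes through the parent's callback, there is a single place to look when the value is wrong.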

So frameworks and libraries often adapt and solve the issues that trouble their users, which seems to have worked out well for Angular at least. My current opinion is that two-way binding as syntax sugar when working with forms is handy and avoids unnecessary event handlers. Meanwhile, two-way binding between components and models is where things get too disorganized.

Rise of Compiler Frameworks

Today, most frontend applications rely on Node.js during development and have a build process. The build process transforms and minifies their code (like JS or CSS) to an optimized production build. So it can be a lot to be aware of when working with JavaScript, and manually doing everything becomes unfeasible as the application grows. So, we rely on things like bundlers, plugins, transpilers, and much more. In this section, we'll look at bundlers and so-called Compiler Frameworks.

Bundler Wars

As a JavaScript developer, you'll likely encounter multiple module formats. The reason is that JavaScript initially had no built-in module system. The consequence is that we're now stuck with code and articles that use AMD, CommonJS, UMD, and ES6 modules. But let's take a step back to understand why these exist and how they relate to how we build applications.

Previously, before module systems, we relied on scripts that lived in the global scope.

<script src="moduleA.js"></script> <script src="moduleB.js"></script>

It was common to use immediately invoked function expressions (IIFEs) to ensure that variables in the file did not pollute the global scope. Note that this was before import and export existed, so clever use of function scope had to do. By using self-invoking functions, we get private scopes from which we may export what we want public. The name for this approach is the revealing module pattern.

// moduleB.js var moduleB = (function () { // globally importing "func" from moduleA var importedFunction = moduleA.func; var privateVariable = "secret"; function getPrivateVariable() { return privateVariable; } return { getPrivateVariable: getPrivateVariable, }; })();

Working directly in the global scope is not good. It's also tedious because we, the developers, are forced to ensure the script files get loaded in the correct order. Rest assured, developers did get tired of waiting for a built-in module system and created their own within the language. As a result, and as mentioned before, we now have multiple different module formats. Some prominent ones are:

- AMD: short for Asynchronous Module Definition, fetches modules asynchronously and was designed to be used in browsers.

- CommonJS: abbreviated CJS, fetches modules synchronously and was designed for server-side (Node supports it by default).

- UMD: is an abbreviation of Universal Module Definition. Its name (universal) derives from the fact that it works on both the front-end and back-end.

- ES6 modules: ES6 finally implements a built-in module system for JS. The format provides an import and export syntax that new JavaScript developers are likely more familiar with than AMD or CJS.
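To make the list above more concrete, here is a sketch of the common UMD wrapper shape, which detects the environment at runtime and falls back from AMD to CommonJS to a global variable. The module name mathUtils and its add function are made up for the example.

```javascript
// UMD-style wrapper: picks AMD, CommonJS, or a global variable at runtime.
// "mathUtils" is a hypothetical module name used for illustration.
(function (root, factory) {
  if (typeof define === "function" && define.amd) {
    define([], factory); // AMD (for example RequireJS)
  } else if (typeof module === "object" && module.exports) {
    module.exports = factory(); // CommonJS (Node)
  } else {
    root.mathUtils = factory(); // plain <script> tag
  }
})(typeof globalThis !== "undefined" ? globalThis : this, function () {
  function add(a, b) {
    return a + b;
  }
  return { add: add };
});
```

The factory function is the actual module. Everything around it is plumbing that an ES6 module would replace with a single export statement.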

Earlier, we relied on third-party module loaders like RequireJS to load our AMD modules correctly in the browser. Module loaders dynamically load modules when needed during runtime. You can view a module loader as a polyfill for the lack of native support in browsers for loading ES modules. They also help with loading our modules in the correct order, which is good when we separate our modules into files, especially since we often have one file per module, which can add up to hundreds of files. Requesting all these files does sound like a massive performance hit. However, this is why HTTP/2 and module loaders are a great fit: HTTP/2 utilizes multiplexing while module loaders fetch files on demand.

Later, module bundlers gained traction. At their core, module bundlers package our files together into a single JavaScript file (a bundle). The bundle contains our modules and their corresponding dependencies. Bundling lets developers work with multiple files during development and then bundle it all together, adapted and optimized for the browser. Thus, developers may use different module formats and compile-to-JS languages during development without requiring much manual labour. The work instead gets done by the bundler, which utilizes loaders that transform individual files adequately.

The release of the Browserify bundler opened up new possibilities because sharing code between the browser and the server became more approachable. We could now write code for the browser with the CommonJS format. Even the package manager npm became commonly used by frontend developers. To name a few build tools, there are:

- Browserify

- Webpack

- Rollup

- Parcel

- Snowpack

- Rome

- esbuild

- Vite

JavaScript-based tooling like Webpack, which was created in 2012, has improved the development experience. Likewise, so have bundlers like Rollup and Parcel. Bundlers like these have pretty much replaced module loaders. We even have import(), and advanced bundlers can offer code-splitting, which splits code into chunks that can be loaded when needed, giving us the best of both worlds. They can also do tree shaking, which removes dead code and thus saves precious bytes.
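Tree shaking can be pictured as a reachability check: start from the entry point, follow what is actually used, and drop the rest. The sketch below runs that check over a hand-written usage graph. The graph and all names in it are hypothetical; real bundlers derive this information from import and export statements.

```javascript
// Which function uses which other functions.
const usageGraph = {
  main: ["formatDate", "sum"],
  formatDate: ["padZero"],
  padZero: [],
  sum: [],
  unusedHelper: ["padZero"],
};

// Keep only what is reachable from the entry point.
function shake(graph, entry) {
  const reachable = new Set();
  const queue = [entry];
  while (queue.length > 0) {
    const name = queue.pop();
    if (reachable.has(name)) continue;
    reachable.add(name);
    queue.push(...graph[name]);
  }
  return Object.keys(graph).filter((name) => reachable.has(name));
}

const kept = shake(usageGraph, "main");
```

Here unusedHelper never makes it into the result because nothing reachable from main refers to it.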

But you've probably encountered slow builds when bundling or minifying your code. One of the reasons for that is that most bundlers have been written in JavaScript up until recently. These JavaScript-based tools haven't been able to deliver satisfactory performance, leaving us with slow build times as our applications have grown. Consequently, this seems to have ignited a bundler war where most bundlers will show benchmarks comparing their speed to others.

Evan Wallace, co-founder of Figma, created esbuild in 2020 with the vision of "bringing about a new era of build tool performance." At the time, esbuild was 10-100x faster than other commonly used build tools because Evan wrote the tool with build times in mind and did so in the programming language Go. Now, platforms, frameworks, and bundlers are putting more effort into reducing their build times. For instance, Rome announced on their Twitter account on 3 Aug 2021 that they are rewriting Rome in Rust. Furthermore, the Speedy Web Compiler (SWC), which is written in Rust, was released in 2019 by DongYoon Kang.

It seems that the future of JavaScript tooling will not use JavaScript as much, which leads us to the next section nicely.

Are Compilers the new Frameworks?

In the State of the Web report from HTTP Archive, we can see that the average page size is 2.3MB on desktop and 2MB on mobile in July 2022. Perhaps the "Average Page" is a myth and a simplification. Regardless, we can be confident that our frameworks have increased the number of bytes we need to send. In other words, many web apps suffer from JavaScript bloat. It's important to understand that JavaScript is costly, and the process is not complete only because the browser has received the bytes. The code also needs to be parsed and executed.

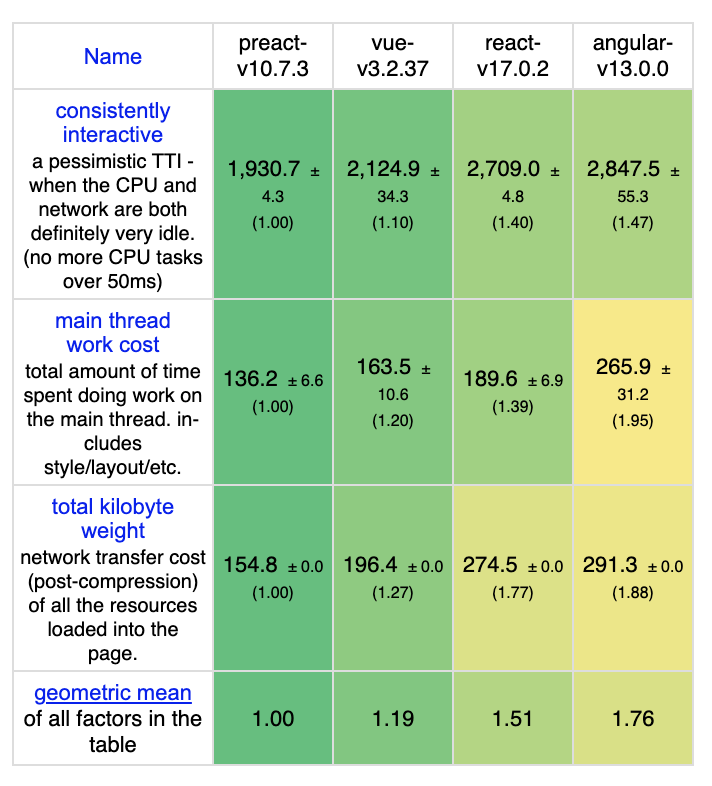

We can get an idea of how this affects our sites by using the result from js-framework-benchmark:

Additionally, according to Bundlephobia (not gzipped), react (6.4KB) + react-dom (130.5KB) equals 136.9KB minified. Thus, before you even write a line of code for your React app, it would already contain a lot of JavaScript. Yet, libraries like Preact (a lightweight alternative to React) have emerged, and Preact is only 10.3KB minified on Bundlephobia. So there are alternatives at our disposal to reduce the bits and bytes. In turn, that invites the question: is it possible to have our cake and eat it too? Well, with the help of compilers, that might become the new standard. For instance, Tom Dale, co-creator of Ember, published an article in 2017 called Compilers are the New Frameworks. Concisely put, Dale wrote that we have viewed web frameworks as runtime libraries, but that they will become compilers in the future.

In 2016, Svelte got released by Rich Harris, the creator of Ractive.js. It has been marketed as the "disappearing framework" because Svelte is a compiler. Svelte compiles your project into optimized vanilla JavaScript at build time instead of doing the majority of its work in the browser. Moreover, being a compiler, Svelte packages the code into HTML, CSS, and JS optimized for the browser during compilation. It does all this while sticking to plain old HTML, CSS, and JS, enhancing them for a better developer experience.

Svelte doesn't really disappear entirely because the library is still required. Svelte functions that your app uses will get put into your bundle. That said, the amount of code is small.

In the Rethinking reactivity talk, Rich Harris says that frameworks are tools to organize your mind — not code that runs in the browser. I quote Rich, "the framework should be something that's run in your build-step." This mind shift that both Dale and Harris talk about shows us a future where new frameworks are synonymous with compilers.

As a result of being a compiler, Svelte isn't as constrained by the limitations of JavaScript as most other frameworks are. It can move reactivity into the actual language, while a library like React requires us to use some API like setState. For instance, you can utilize reactive declarations by prefixing a statement with $: which says "re-run this code whenever any of the referenced values change."

<script> let number = 0; $: squaredNumber = number * number; function incrementNumber() { number += 1; } </script> <button on:click="{incrementNumber}">Increment number</button> <p>{number} squared is {squaredNumber}</p>
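One way to picture what a compiler can do with such a component is to rewrite assignments so that they also re-run the dependent statements and update the output. The following is a heavily simplified, hand-written sketch of that idea. The invalidate, runReactiveDeclarations, and render helpers are hypothetical, and Svelte's real generated code looks different.

```javascript
let number = 0;
let squaredNumber;
let html = "";

// Stands in for the `$: squaredNumber = number * number` declaration.
function runReactiveDeclarations() {
  squaredNumber = number * number;
}

// Stands in for the targeted DOM updates a compiler would generate.
function render() {
  html = "<p>" + number + " squared is " + squaredNumber + "</p>";
}

// A compiler could wrap `number += 1` so that the change is noticed.
function invalidate(assign) {
  assign();
  runReactiveDeclarations();
  render();
}

function incrementNumber() {
  invalidate(() => { number += 1; });
}

// Initial render, followed by two simulated button clicks.
runReactiveDeclarations();
render();
incrementNumber();
incrementNumber();
```

The point is that the author only writes a plain assignment, and the bookkeeping around it is added at build time rather than requested through a runtime API.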

Now, Svelte seems very much like a language — and that's because it is. The reality about Svelte is that it's a language, which Harris realized nearing the release of Svelte 3.

So will our future web frameworks be languages?

It might seem strange that a framework turned into a language. But haven't we already been doing this? Just think of transpilation, polyfilling, JSX, minifiers, templating languages (like Mustache), and bundlers. Because, unless you're working in a legacy codebase, you're most likely using these tools. Moreover, most frameworks already rely on some compilation or build-step. For these reasons, a compiler like Svelte seems like a very natural step in the evolution of web frameworks.

Svelte has made its mark and deserves credit, but it turns out that creating a language for describing reactive user interfaces is not something new. For example, look at Elm (2012), Marko (2014), or Imba (2015). With that said, they do differ and have their selling points. But, we will not delve into that because the main point is that we are now relying on compilers/builders even more.

Frameworks within frameworks

Most frameworks like React and Vue rely on Client-Side Rendering (CSR), which entails that you render the application in the browser. Routing, templating, data fetching, and all logic are handled on the client side. This has its downsides, a major one being SEO. It forces crawlers to execute JavaScript to get to the content, a step they often skip. Also, because we may change content without updating the URL, it gets harder for the crawler to find and index content.

We of course want our websites to rank well on search engines like Google, so frameworks that allow us to still use SPA frameworks while reaping the benefits of Server-Side Rendering (SSR) and Static Site Generation (SSG) with ease have emerged. For example, Next.js for React, Nuxt.js for Vue, Sapper for Svelte, and Angular Universal for Angular. In the context of SPAs, SSR entails taking client-side templates from the respective SPA and rendering them on the server side. SSR generates the HTML on the server and responds with the HTML to the browser on request. By rendering the required components from the SPA to HTML on the server the search engine crawlers will see the page fully rendered at once.
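Stripped of framework details, SSR boils down to running a component as a function that returns HTML and sending the result as the response. Below is a minimal sketch under that assumption. The ProductList component, the renderPage helper, and the product data are invented for the example.

```javascript
// Escape text so that data cannot inject markup into the response.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// A "component" here is just a function from props to an HTML string.
function ProductList(props) {
  const items = props.products
    .map((p) => "<li>" + escapeHtml(p.name) + "</li>")
    .join("");
  return "<ul>" + items + "</ul>";
}

// What a request handler would do: render, then wrap in a document.
function renderPage(component, props) {
  return (
    "<!DOCTYPE html><html><body><div id=\"app\">" +
    component(props) +
    "</div></body></html>"
  );
}

const page = renderPage(ProductList, {
  products: [{ name: "Keyboard" }, { name: "Mouse <wireless>" }],
});
```

A crawler that requests this page gets the product list directly in the markup, with no JavaScript execution required.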

Then we also have pre-rendering. Pre-rendering means executing an app at build time to capture the initial state as static HTML. In recent years, this has become an increasingly common approach to building websites. This is where the software architecture and philosophy "JAMstack" enters the picture, which has pre-rendering as one of its core principles. The JAM in JAMstack stands for JavaScript, APIs, and Markup.

The ecosystem contains Static Site Generators that make it easier to build JAMstack websites, such as Next.js, Nuxt.js, Sapper, and Angular Universal yet again, but also Gatsby (React), Gridsome (Vue), and SvelteKit (the successor of Sapper). On a side note, with the advent of headless CMSs, we can also avoid coupling the CMS to our projects and instead expose it via an API. Examples of these are Ghost, Strapi, and Netlify CMS. It makes sense to use SSG when your site does not change that much, because you can then get the data from the database, headless CMS, or filesystem at build time and store the static output.
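The build step of a static site generator can be sketched as a function that takes content and returns the files to deploy. The posts array, the renderPost and buildSite functions, and the output paths below are all hypothetical. A real generator would read the content from a CMS or the filesystem and write the files to disk.

```javascript
// Content as it might come from a headless CMS or markdown files.
const posts = [
  { slug: "hello-world", title: "Hello world", body: "First post." },
  { slug: "second-post", title: "Second post", body: "More words." },
];

function renderPost(post) {
  return "<article><h1>" + post.title + "</h1><p>" + post.body + "</p></article>";
}

// Runs once at build time and returns the files a static host would serve.
function buildSite(allPosts) {
  const files = {};
  for (const post of allPosts) {
    files["/posts/" + post.slug + "/index.html"] = renderPost(post);
  }
  const links = allPosts
    .map((p) => "<li><a href=\"/posts/" + p.slug + "/\">" + p.title + "</a></li>")
    .join("");
  files["/index.html"] = "<ul>" + links + "</ul>";
  return files;
}

const output = buildSite(posts);
```

Nothing here runs per request, which is why this approach suits content that changes rarely.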

These types of frameworks are often called metaframeworks, and they build on so-called component frameworks like React or Svelte. Some offer either SSR or SSG, while some offer both, and they continuously improve and offer new solutions. So client-side-only is not enough anymore; the future is hybrid according to Dan Abramov, co-creator of Redux and part of the React team. In the context of JavaScript, we have entered an era where our frameworks have become full-stack frameworks that utilize component frameworks.

Understanding Hydration

These metaframeworks solved our major SEO problem by utilizing SSR or SSG instead of relying too much on CSR. As a result, crawlers are no longer required to execute JavaScript to access the content. But there's a missing piece we haven't covered. We must somehow connect the JavaScript with the HTML sent to the client. That's where hydration enters the picture.

Hydration adds interactivity to server-rendered HTML by:

- Attaching listeners to DOM nodes to make them interactive.

- Building the internal state of the components of the framework.
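The two steps above can be sketched without a browser by letting a plain object stand in for a server-rendered DOM node. The createFakeButton and hydrateCounter functions are hypothetical helpers for illustration; a real framework would walk the actual DOM.

```javascript
// Stand-in for a server-rendered DOM node.
function createFakeButton(text) {
  return {
    textContent: text,
    listeners: {},
    addEventListener(type, handler) { this.listeners[type] = handler; },
    click() { if (this.listeners.click) this.listeners.click(); },
  };
}

// The server already rendered "Count: 3", but the markup has no behaviour yet.
const button = createFakeButton("Count: 3");
button.click(); // nothing happens: the page only looks interactive
const textBeforeHydration = button.textContent;

// Step 1: rebuild the component state the server had.
// Step 2: attach listeners to the existing node instead of re-creating it.
function hydrateCounter(node, initialCount) {
  const state = { count: initialCount };
  node.addEventListener("click", () => {
    state.count += 1;
    node.textContent = "Count: " + state.count;
  });
  return state;
}

const state = hydrateCounter(button, 3);
button.click(); // now the click is handled
```

Note that the initial count has to reach the client somehow, which hints at the duplicated work that the following sections discuss.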

The peril of this approach is that the components can only be hydrated once the entire JavaScript file containing the app has been loaded, parsed, and executed. So our users first receive static HTML that is not yet hydrated, and the page deceptively looks interactive for 1-2 seconds (or even longer) before it actually is. Thus, they get an extremely frustrating experience when trying to interact with a frozen UI. This phase gets referred to as the uncanny valley.

It turns out that perhaps hydration is pure overhead and might not be the most viable solution. For example, a common rehydration pitfall is that the server gathers information about the app when building/rendering it — but does not save it for the client to re-use. So the client will have to rebuild the application again.

Consequently, all these problems hurt our Time to Interactive (TTI), and as we'll see, developers started trying to mitigate this issue.

Frameworks solving the Hydration problem

Techniques and frameworks that mitigate the hydration problem have emerged. Thus, before continuing our framework journey, we'll have to understand some of these techniques at a base level. It's also important to realize that this is a current topic at the time of writing, and therefore the terms can be interpreted as a bit muddy since they get used synonymously in some articles:

- Standard hydration: entails eager loading and full hydration.

- Progressive hydration: entails progressively loading code and hydrating pieces of the page (static HTML) to make them interactive when needed, based on events/interactions (think visibility or clicks). This method requires carefully splitting your app into chunks beforehand to be beneficial. The problem that can occur is that much of the framework needs to be shipped anyway, so you end up loading your entire app, and delaying that work can then cause more harm than doing it upfront.

- Partial hydration: entails utilizing the server to only send necessary code by analyzing which parts have little to no interactivity or state. For example, if a component only needs to be rendered once, then only static HTML is sent for that part. But if it's interactive, then a so-called island (interactive UI component) is sent as well.

- Selective hydration (React 18): entails starting hydration before all HTML and JavaScript have been fully downloaded. At the same time, it prioritizes hydrating the components that the user interacts with.

- Islands Architecture: is often referred to as the same thing as partial hydration, but it's more an approach to site design that uses partial hydration. It's an approach that enables us to ship less JavaScript by utilizing "islands" that give our static HTML interactivity, but that can still be fetched independently when needed.

- Resumability: entails not repeating any work in the browser that has already been done server-side.

Hopefully, this list provides more clarity. With that said, I've seen new frameworks that utilize partial hydration write a "for this article, let's say that partial hydration is X" cop-out when describing partial hydration. However, don't misconstrue this as criticism because the intention is to illustrate that the terms Islands Architecture, partial hydration, and progressive hydration are relatively new and often used synonymously in articles. As a result, things can get disorganized when discussing what these approaches entail.

Despite the terms, their goal (Islands Architecture, partial, and progressive) is essentially to decompose our app into chunks so we can load parts more intelligently based on actual needs (like visibility, a click). But still, they are not the same thing and can independently be approached from different angles. Consequently, frameworks have unique approaches to the problem with standard hydration, which we'll look at in this chapter.

Islands Architecture vs Micro-frontends

Islands Architecture essentially means using partial hydration to separate an app into islands of interactivity that can be progressively loaded/hydrated while favouring SSR over CSR. The story goes that Katie Sylor-Miller coined the "Component Islands" pattern during a meeting with Jason Miller (creator of Preact), who later wrote "Islands Architecture" to describe this approach, which in turn popularized the term. Yet, it's not a new idea to decompose a page of an app into multiple entry points. Other similar approaches already do this, like Micro Frontends.

According to Michael Geers, author of "Micro Frontends in Action," the term (not the idea) Micro Frontends was coined at the end of 2016 by the team over at ThoughtWorks. More technically speaking, Micro Frontends extend the microservice idea to the frontend by viewing our app as a composition of features owned by independent teams. In the list below, we see some Micro Frontend characteristics:

- Technology Agnostic: teams can select and upgrade their stack alone.

- Separate Code: build self-contained apps that do not share state or variables between apps.

- Use Prefixes: utilize naming conventions if you cannot isolate something.

- Prefer Native Browser Features: entails using custom elements or custom events.

- Site Resilience: entails using progressive enhancement.

Both Islands Architecture and Micro Frontends advocate decomposing your UI into independent parts. The fundamental difference is that the Islands Architecture heavily relies on the server to render static HTML regions that later can be hydrated on the client when and if needed. It does so by separating content into two types:

- Static content: is non-interactive and has no state, so no hydration is required.

- Dynamic content: is interactive or has a state, so hydration is required.

In summary, Islands Architecture enables us to ship less JavaScript by utilizing "islands" that make parts of our static HTML interactive — while being fetched independently when needed.
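The split into static and dynamic content can be sketched as a planning step on the server: every component gets rendered to HTML, but only the interactive ones are marked as islands and get a script. The component list, the data-island attribute, and the planPage function are made up for this example.

```javascript
// Hypothetical page description: which components exist and whether they need the client.
const pageComponents = [
  { name: "Header", interactive: false, script: "/js/header.js" },
  { name: "ImageCarousel", interactive: true, script: "/js/carousel.js" },
  { name: "Article", interactive: false, script: "/js/article.js" },
  { name: "CommentForm", interactive: true, script: "/js/comments.js" },
];

// Static components become plain HTML; islands get a marker and a script.
function planPage(components) {
  const html = components
    .map((c) =>
      c.interactive
        ? "<section data-island=\"" + c.name + "\"></section>"
        : "<section></section>"
    )
    .join("");
  const scripts = components.filter((c) => c.interactive).map((c) => c.script);
  return { html, scripts };
}

const plan = planPage(pageComponents);
```

In this sketch only two of the four scripts are ever sent to the browser, which is where the savings in shipped JavaScript come from.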

Islands Frameworks

I have categorized frameworks that say they implement Islands Architecture as Islands Frameworks. We'll now examine Astro and Fresh, which both use the Islands Architecture with their own approach.

In June 2021, Astro got introduced with its public beta release. Astro is a Static Site Generator (SSG) that utilizes partial hydration. It has an opinionated folder structure, so Astro can provide things like file-based routing that generates URLs based on the /src/pages/ folder (like Next.js). Additionally, one of its other selling points is that it's framework-agnostic and lets you "Bring Your Own Framework" (BYOF) like React, Preact, Svelte, Vue, etc.

To enable BYOF, Astro includes two types of components. Firstly, Astro Components (.astro) are the building blocks of an Astro project. Secondly, Framework Components allow you to use components from other frameworks within Astro components.

--- // src/pages/MultipleFrameworksWithAstroPage.astro import SvelteComponent from '../components/SvelteComponent.svelte'; import ReactComponent from '../components/ReactComponent.tsx'; import VueComponent from '../components/VueComponent.vue'; --- <div> <SvelteComponent /> <ReactComponent /> <VueComponent client:visible/> </div>

It's important to realize that the Framework Component can require some renderer from a framework/library on top of its component code. Then, you would have to download that as well. As a consequence of this, the page above might have to download code from three different frameworks. With that said, you can use Client Directives to control how the Framework Components are hydrated. An example of directives is the client:visible above, which ensures that JS is loaded when the user scrolls that component into view. Although, you should still watch out for Micro frontend anarchy. Lastly, you can enable SSR with Astro by using an adapter while SSG is out of the box.

On June 28, 2022, Fresh got released. Fresh is a full-stack framework that uses Preact, JSX, and the Deno runtime. It embraces Islands Architecture by rendering all pages with SSR while allowing you to create islands with Preact components to enable client-side interactivity. Also, like Astro, a Fresh project has an opinionated folder structure, which notably includes /static, /islands, and /routes.

The /routes folder enables multiple features like file-system routing and dynamic routes (think /routes/posts/[name].tsx). Moreover, the /routes folder can have a handler and a component, where you must have one or both. The handlers enable the possibility to have API routes that return things like JSON or fetch data before rendering the JSX. The following is a simplified example of a handler that fetches some data and passes it via the ctx.render function as props.data to the component that is going to get rendered for the /contact route:

// /routes/contact.tsx /** @jsx h */ import { h } from "preact"; import { Handlers, PageProps } from "$fresh/server.ts"; interface ContactDetail { name: string; phone: string; } export const handler: Handlers<ContactDetail[] | null> = { async GET(_, ctx) { const res = await fetch("https://example.com/contacts"); const data = await res.json(); return ctx.render(data); }, }; export default function ContactPage({ data, }: PageProps<ContactDetail[] | null>) { if (!data) { return <h1>Contacts not found</h1>; } return ( <main> <h1>Contact</h1> <p>{JSON.stringify(data)}</p> </main> ); }

Then, to add interactivity to the page, we would create a Preact component inside the /islands folder and then simply import that component into /routes/contact.tsx.

Fresh also takes progressive enhancement seriously by having its form submission infrastructure built around the native <form> element. If needed, you can make your forms more interactive by using islands. Lastly, Fresh does not have a build step, which in turn enables instant deployments. But be aware that Fresh does not support SSG.
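As a side note on the file-system routing with dynamic routes mentioned earlier (think /routes/posts/[name].tsx), the core idea can be sketched as turning a file path into a URL matcher. The compileRoute function below is a hypothetical illustration and not how Fresh or Astro implement routing.

```javascript
// Turn a route file path into a matcher. "[name]" marks a dynamic segment.
function compileRoute(filePath) {
  const pattern = filePath.replace(/^\/routes/, "").replace(/\.tsx$/, "");
  const paramNames = [];
  const source = pattern
    .split("/")
    .map((segment) => {
      const dynamic = segment.match(/^\[(.+)\]$/);
      if (dynamic) {
        paramNames.push(dynamic[1]);
        return "([^/]+)"; // capture anything up to the next slash
      }
      // Escape characters that have a special meaning in regular expressions.
      return segment.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
    })
    .join("/");
  const regex = new RegExp("^" + source + "$");

  return function match(url) {
    const found = regex.exec(url);
    if (!found) return null;
    const params = {};
    paramNames.forEach((name, i) => { params[name] = found[i + 1]; });
    return params;
  };
}

const matchPost = compileRoute("/routes/posts/[name].tsx");
const params = matchPost("/posts/hello-world");
const miss = matchPost("/contact");
```

The folder structure doubles as the routing table, so adding a page is a matter of adding a file.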

The Resumable Framework Qwik

Islands aren't perfect and come with challenges, such as how to do inter-island communication, so even Islands Architecture might not be the answer. Perhaps we could instead resume the execution in the browser where the server left off?

Miško Hevery, the co-creator of Angular, is back with a new framework called Qwik, known as "The Resumable Framework." Qwik might have the answer to the hydration problem with its newly coined term resumability. Qwik can resume the execution from where the server left off by moving information gathered during SSR into the HTML so that the client can utilize it.

To understand what that means, let's inspect a simplified flow:

- The server serializes listeners, internal data structures, and state into the HTML.

- The browser gets the HTML that contains a less than 1KB inlined JS-script referred to as the Qwikloader.

- The Qwikloader registers a single global event listener that awaits to be notified of which code chunk to download.

Initially, you would have to parse no JavaScript except the minuscule Qwikloader. The goal is to achieve an interactive state instantaneously by delaying the execution of JavaScript as much as possible. So, the Qwik server will serialize event handlers into the DOM similarly to this:

<button onClickQrl="./chunk.js#handler_symbol">click me</button> <button onClickQrl="./chunk.js#handler_other_symbol">click me</button>

Then, the Qwikloader will only load the code for the corresponding event handler once you click it. It does so with the help of the URL that the server attached to the HTML element. So if you don't click it, it will not download the unnecessary bytes.
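The mechanism can be sketched as one global listener that reads the attribute, loads the chunk, and calls the named symbol. This is a simplified illustration and not Qwik's actual loader. The loadChunk function is a synchronous, hypothetical stand-in for what would really be an asynchronous download, and plain objects stand in for DOM elements.

```javascript
// Chunks the "network" can deliver. In a real app these are separate files.
const chunks = {
  "./chunk.js": {
    handler_symbol: () => "first handler ran",
    handler_other_symbol: () => "second handler ran",
  },
};
const downloaded = [];

// Hypothetical stand-in for downloading a chunk on demand.
function loadChunk(url) {
  if (!downloaded.includes(url)) downloaded.push(url);
  return chunks[url];
}

// One global listener: read the attribute, load the chunk, call the symbol.
function onGlobalClick(target) {
  const qrl = target.attributes.onClickQrl;
  if (!qrl) return undefined;
  const [url, symbol] = qrl.split("#");
  return loadChunk(url)[symbol]();
}

// A plain object standing in for the first button in the markup above.
const firstButton = { attributes: { onClickQrl: "./chunk.js#handler_symbol" } };

const downloadsBeforeClick = downloaded.length; // nothing is loaded until interaction
const clickResult = onGlobalClick(firstButton);
```

The listener itself knows nothing about the application, which is why it can stay tiny while the handlers live in chunks that may never be requested.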

There's more to Qwik, like its use of preloads and prefetches. But, the key point to understand about Qwik is that the server moves essential information into the HTML so that the client can make more intelligent choices.

Do you need a framework?

The answer is that it depends. You probably don't need a framework or library, but they can make your life easier. Frameworks did not get created to solve your exact problems. They also make no commitment to you and your project. With that said, frameworks come with benefits as well. But that doesn't mean we need to create our own framework. Instead, we should be aware of the context and pros and cons.

Ask yourself, what makes sense for your project? Here are some questions you could ask yourself:

- Does the framework violate WCAG?

- How crucial is SEO?

- Is it easy to maintain?

- What does your team think about it?

- Do you have a team?

- How interactive is your app?

- Static or SSR, or why not both?

- Can you create an architectural boundary for your business objects?

- Can you learn something from using it?

- Is the framework still maintained?

- How is the framework maintained?

So instead of asking which JavaScript framework is the best, we should ask which JavaScript framework suits our project best. The "right tool for the job" mindset really makes sense here. For instance, if you're building an app used internally, then SEO probably does not matter as much as interactivity. If you run an e-commerce site, on the other hand, you likely need both. Moreover, perhaps you're not building your own project. You might be looking for a job, and in that case, React could be your best bet.

However, choosing the right tool might not be that easy when there are so many to choose from. We've talked about jQuery, Knockout, Backbone, AngularJS, Ember, Meteor, React, Vue, Angular, Svelte, Astro, Fresh, and Qwik. Yet we probably haven't even covered half of them. To name a few more, there are Mithril.js, SolidJS, CanJS, Hyperapp, Scully, Remix, Elder.js, and many others.

Additionally, what makes a framework good can be very subjective, and experienced developers can often be persuasive in their reasoning as to why their framework is the best. Their persuasiveness and buzzwords, combined with our JavaScript fatigue, often lead us to accept their pitch. That is not to say that their pitch must be bad or wrong; they might be correct. The fact that people in our community actually care about things makes our experience more vibrant and intriguing. Also, the abundance of frameworks shows that people are willing to contribute and that innovation is alive.

You could look at it more negatively and make arguments that would likely make sense too. The intent of this book was never to give you an ultimate answer to which framework is the best, because as of today there is none. With our journey, you have hopefully cultivated a repertoire of knowledge of the past and present that helps you better navigate the great sea of JavaScript frameworks.

About the Author

My name is Nicklas Envall, and I'm a software developer. I've been interested in websites since a young age. A website can serve many different purposes, and the fact that you can create one yourself has always intrigued me. Today I work as a developer, and I occasionally write about tech in my free time at https://programmingsoup.com.